Contents

Route tables (NAT, HTTP, DNS, IP and OSI Network)

RESTful Web Services (XML, JSON)

Public key encryption, SSH, access credentials, and X.509 certificates

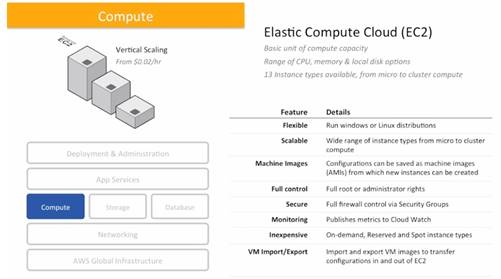

Amazon EC2 (Elastic Compute Cloud)

EC2 Instance store volumes (ephemeral drives)

Elastic Block Store (EBS) and Databases on EC2

Amazon VPC (Virtual Private Cloud)

Security: Security Groups and Network Access Control List (ACL)

Elastic Network Interfaces (ENI)

Amazon EBS (Elastic Block Store)

CloudFront Slow-Start Optimization

Cf_dg_CloudFront_Developer.pdf

Serving Private Content and Accessibility

Running the Lab (starts on page 11)

Amazon RDS (Relational Database Service)

Deployment, Administration, Management

AWS IAM (Identity and Access Management)

AWS CloudHSM (Hardware Security Modules)

Amazon EMR (Elastic MapReduce)

Amazon SWF (Simple Workflow Service)

Amazon SQS (Simple Queue Service)

Additional Software and Services

Architect on AWS course/labs

EC2, VPC, EBS, S3, Route 53, IAM, RDS, SQS, DynamoDB, ...

Excluded: Redshift, SES, OpsWorks

Carefully study the FAQ section for these services

http://aws.amazon.com/training/self-paced-labs/

From AWS_certified_solutions_architect_associate_blueprint.pdf file:

AWS Knowledge

Hands-on experience using compute, networking, storage, and database AWS services

Professional experience architecting large scale distributed systems

Understanding of Elasticity and Scalability concepts

Understanding of network technologies as they relate to AWS

A good understanding of all security features and tools that AWS provides and how they relate to traditional services

A strong understanding of how to interact with AWS (AWS SDK, AWS API, Command Line Interface, AWS CloudFormation)

Hands-on experience with AWS deployment and management services

General IT Knowledge

Excellent understanding of typical multi-tier architectures: web servers (Apache, nginx, IIS), caching, application servers, and load balancers

RDBMS (MySQL, Oracle, SQL Server), NoSQL

Knowledge of message queuing and Enterprise Service Bus (ESB)

Familiarity with loose coupling and stateless systems

Understanding of different consistency models in distributed systems

Experience with CDN and performance concepts

Network experience with route tables, access control lists, firewalls, NAT, HTTP, DNS, IP and OSI Network

Knowledge of RESTful Web Services, XML, JSON

Familiarity with the software development lifecycle

Work experience with information and application security including public key encryption, SSH, access credentials, and X.509 certificates

Other Study Tips:

- VPC configuration and troubleshooting, IP subnetting. VPC, VPC, VPC: must excel!

- Use Cases for SWF, SQS and SNS

- ELB interactions with auto-scaling

- S3 security use cases

- EBS vs ephemeral storage for EC2 instances

- CloudFormation basics

- EBS config and snapshots for I/O performance and durability


| Status | Filename | Topics |
|---|---|---|
| Y | 01_Hands_On_IAM_wb.pdf | IAM |
| Y | 02_Hands_On_EC2_wb.pdf | |
| Y | 03_Hands_On_EBS_wb.pdf | |
| Y | 04_Hands_On_S3_wb.pdf | S3 |
| Y | 05_Hands_On_VPC_wb.pdf | |
| Y | 07_Elastic_Load_Balancing.pdf | EC2, ELB |
| Y | 08_Auto_Scaling.pdf | EC2, ELB |
| Y | AWS IAM Lab.pdf | IAM |
| Y | AWS Certification - Web Video Training.docx | Web Videos |
| Y | AWS_Amazon_SES_Best_Practices.pdf | SES |
| | AWS_certified_solutions_architect_associate_blueprint.pdf | |
| Y | AWS_certified_solutions_architect_associate_examsample.pdf | |
| Y | AWS_Cloud_Best_Practices.pdf | Background |
| Y | AWS_Overview.pdf | Background |
| Y | AWS_Risk_and_Compliance_Whitepaper.pdf | |
| Y | AWS_Security_Best_Practices.pdf | |
| | AWS_Security_Whitepaper.pdf | |
| Y | AWS_Storage_Options.pdf | S3, Glacier, EBS, EC2 Instance Storage, AWS Import/Export, Storage Gateway, CloudFront, SQS, RDS, DynamoDB, ElastiCache, Redshift, Databases |
| Y | AWS_Storage_Use_Cases.pdf | S3, EC2 Instance, EBS, CloudFront, SimpleDB, etc. |
| | AWS_Web_Hosting_Best_Practices.pdf | |
| | AWSImportExport-dg.pdf | Import Export |
| Y | aws-cli.pdf | CLI |
| | awseb-dg.pdf | Elastic Beanstalk |
| Y | awssg-intro.pdf | |
| Y | cf_dg.pdf | CloudFront |
| Y | cfn-ug.pdf | CloudFormation |
| Y | dc-ug.pdf | Direct Connect |
| F | dynamodb-dg.pdf | DynamoDB |
| Y | ec2-ug.pdf | EC2 |
| Y | elasticache-ug.pdf | ElastiCache |
| Y | govcloud-us-ug.pdf | GovCloud |
| Y | rds-ug.pdf | RDS |
| - | Redshift-gsg.pdf | Redshift |
| - | Redshift-mgmt.pdf | Redshift |
| Y | Route53-dg.pdf | Route 53 |
| Y | s3-dg.pdf | S3 |
| Y | sns-dg.pdf | SNS |
| | sqs-gsg.pdf | SQS |
| | swf-dg.pdf | SWF |
| Y | SampleQuestions.docx | |
| Y | Sample Questions for Amazon Web Services Certified Solution Architect Certification.docx | |
| | storagegateway-ug.pdf | Storage Gateway |
| Y | Studynotes.docx | |
| Y | vpc-ug.pdf | VPC |

http://www.jamiebegin.com/tips-for-passing-amazon-aws-certified-solutions-architect-exam/

http://nitheeshp.tumblr.com/post/61394863836/aws-certified-solution-architect-exam-tips#!

http://www.cloudtrail.org/blog/339amazon-route-53-easy-example/

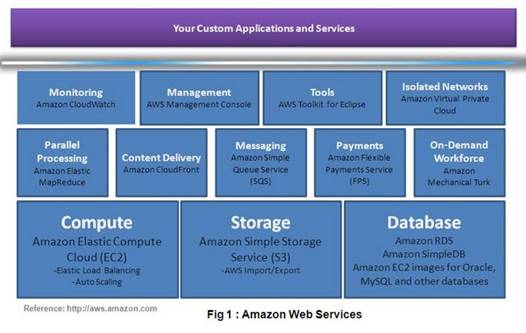

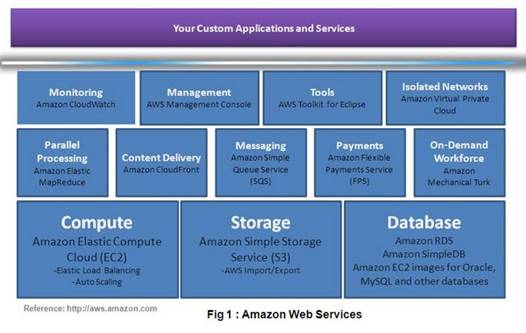

Benefits of Cloud:

- Almost zero upfront infrastructure investment

- Just-in-time Infrastructure

- Efficient resource utilization

- Usage-based costing

- Reduced time to market

- Auto-scaling / Proactive Scaling (possibly infinite scalability)

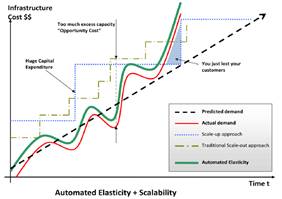

- Elasticity:


- Automation (scriptable infrastructure)

- Efficient Development lifecycle

- Improved Testability

- Disaster Recovery and Business Continuity

- Elastic IP addresses: allocate a static IP address and programmatically assign it to instances

Security Notes:

- AWS is certified and accredited = ISO 27001 certification

- Physical security = knowledge of data center locations is restricted, and the sites are physically guarded in a variety of ways

- Secure services = SSL / encryption

- Data privacy = encryption

Best Practices:

- Design for failure: assume the worst, that servers will fail and data centers will be lost

- Designing for failure means focusing on redundancy, data recovery, backup, quick reboot, etc.

- Fail over gracefully using Elastic IPs: they are dynamically re-mappable, so you can quickly remap to another server

- Utilize multiple Availability Zones: spread across data centers for redundancy

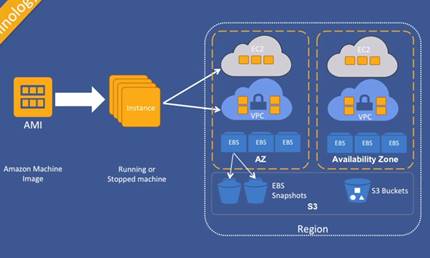

- Maintain an Amazon Machine Image (AMI) to restore from; all subsequent instances are clones of it (virtualization)

- Utilize Amazon CloudWatch for visibility on hardware failures or performance degradation

- Use cron jobs to take incremental Amazon EBS snapshots, which are stored in S3, for data persistence

- Utilize Amazon RDS for data retention and backups

- Decouple components

- Implement Elasticity

o Proactive cyclic Scaling (daily, weekly, monthly)

o Proactive event-based scaling

o Auto-scaling based on demand

- Think Parallel

Elastic = the ability to scale computing resources up and down easily, with minimal friction. It helps avoid provisioning resources up front for projects with variable consumption rates or short lifespans. Elastic Load Balancing and Auto Scaling automatically scale your AWS cloud-based resources up to meet unexpected demand and then back down when demand decreases.

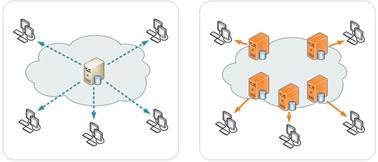

Content Delivery Network (CDN) = a large distributed system of servers deployed in multiple data centers across the internet, with the goal of serving content with high availability and performance (e-commerce, live streaming, social).

Traditional vs CDN:

CDNs operate as Application Service Providers (ASP) on the internet; the top ones include Microsoft Azure and Amazon CloudFront.

http://en.wikipedia.org/wiki/Content_delivery_network

Amazon CloudFront is a CDN web service that integrates with AWS. Requests for content are automatically routed to nearest edge location (high performance).

http://aws.amazon.com/cloudfront/


Transmission Control Protocol (TCP, OSI Layer 4) = developed with DARPA funding as part of ARPANET; provides reliable, ordered delivery of data between hosts

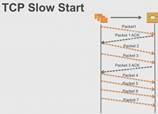

TCP slow start works by sending a single packet and waiting for an ACK, then sending twice as many packets and waiting for the next ACKs. The number of packets keeps doubling after each fully acknowledged round until there is a disruption or data loss, at which point it starts back at one packet.
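A few lines of Python make the doubling pattern concrete. This is a simplified sketch that follows the description above only (double on success, reset to one packet on loss); it ignores the slow-start threshold and the congestion-avoidance phase that real TCP implementations use. `slow_start` and `loss_rounds` are illustrative names.

```python
def slow_start(rounds, loss_rounds=frozenset()):
    """Return how many packets are sent in each round trip.

    Simplified model: the window doubles after every fully acknowledged
    round and drops back to one packet after a loss.
    """
    window = 1
    sent = []
    for r in range(rounds):
        sent.append(window)
        if r in loss_rounds:
            window = 1      # loss detected: start over at one packet
        else:
            window *= 2     # every packet ACKed: double the window
    return sent


print(slow_start(6, loss_rounds={3}))  # [1, 2, 4, 8, 1, 2]
```

The early rounds are the expensive part for short transfers, which is why CDNs try to keep connections warm rather than restart at one packet.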

IP address = a numeric address such as 123.123.123.123 that identifies a device on the network

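Subnet Mask = a way to organize a network into groups of addresses; a device can only reach devices in its own group (subnet) directly. The mask tells a device which part of an IP address identifies the network and which part identifies the host, so without it devices cannot tell who is on the same network. Class C = 255.255.255.0 (256 addresses) and Class B = 255.255.0.0 (65,536 addresses, more than Class C).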
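Python's standard `ipaddress` module shows what a mask does in practice. A minimal sketch; the 192.168.x.x and 172.16.x.x networks are arbitrary private ranges chosen for illustration.

```python
import ipaddress

# Class C-sized network: mask 255.255.255.0 (/24) leaves 8 host bits.
net_c = ipaddress.ip_network("192.168.1.0/255.255.255.0")
# Class B-sized network: mask 255.255.0.0 (/16) leaves 16 host bits.
net_b = ipaddress.ip_network("172.16.0.0/255.255.0.0")

print(net_c.prefixlen, net_c.num_addresses)  # 24 256
print(net_b.prefixlen, net_b.num_addresses)  # 16 65536

# Two hosts share a subnet only if both fall inside the same network;
# otherwise traffic between them has to go through the default gateway.
a = ipaddress.ip_address("192.168.1.10")
b = ipaddress.ip_address("192.168.2.10")
print(a in net_c, b in net_c)  # True False
```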

Default Gateway = when a destination is not on the local subnet, the device sends the traffic to the default gateway, which connects the subnet to the internet or to another WAN/LAN.

Domain Name System (DNS) = resolves domain names into IP addresses

Dynamic Host Configuration Protocol (DHCP) = every device on a network must have an IP address, assigned either statically or dynamically. If more than one device has the same IP address, packets get lost because they may be delivered to either location. DHCP lets one host control and manage IP address assignment.

Network Address Translation (NAT) = addresses are translated by the router so that a device's internal (private) address can differ from its external (public) address.
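The router's translation step can be sketched as a small port-address translation table. This is a toy model, assuming a single shared public IP (203.0.113.5, a documentation address) and a hypothetical starting port of 50000; real NAT devices also track the protocol and destination and expire idle entries.

```python
import itertools


class NatTable:
    """Toy port-address translation (PAT) table for one public IP."""

    def __init__(self, public_ip, first_port=50000):
        self.public_ip = public_ip
        self._ports = itertools.count(first_port)
        self._out = {}  # (private_ip, private_port) -> public_port
        self._in = {}   # public_port -> (private_ip, private_port)

    def outbound(self, private_ip, private_port):
        """Rewrite an outgoing packet's source to the public address."""
        key = (private_ip, private_port)
        if key not in self._out:
            port = next(self._ports)
            self._out[key] = port
            self._in[port] = key
        return self.public_ip, self._out[key]

    def inbound(self, public_port):
        """Map a reply back to the private host; unsolicited traffic has no entry."""
        return self._in.get(public_port)


nat = NatTable("203.0.113.5")
print(nat.outbound("10.0.0.12", 40000))  # ('203.0.113.5', 50000)
print(nat.outbound("10.0.0.13", 40000))  # ('203.0.113.5', 50001)
print(nat.inbound(50001))                # ('10.0.0.13', 40000)
```

This is also why a NAT instance lets private hosts fetch updates while remaining unreachable from the internet: inbound packets without a matching entry are dropped.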

CNAME = Canonical Name record = a type of DNS resource record that specifies that a domain name is an alias of another domain name, the "canonical" domain, and therefore resolves to that domain's IP address.

IOPS (Input/Output Operations Per Second) = commonly used for benchmarking computer storage devices (HDD, SSD, SAN). A 7200 RPM HDD delivers about 75-100 IOPS, whereas a SATA 3 Gbit/s SSD can deliver 400-20,000 IOPS. Higher is faster.

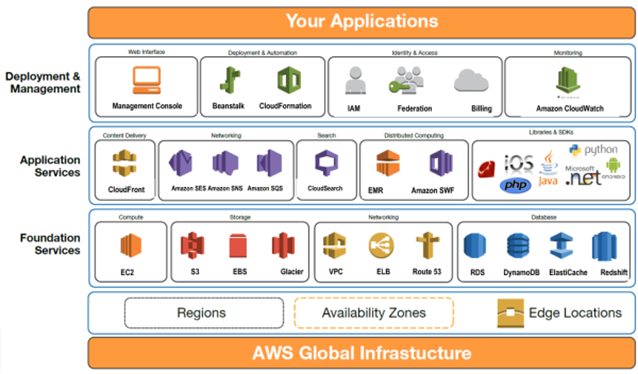

Region = a separate geographic area. Regions are completely isolated from one another. Not every region offers every AWS service; at the time of writing, EC2 was available in the following regions:

- US East (N. Virginia)

- US West (Oregon)

- US West (N. California)

- EU (Ireland)

- Asia Pacific (Singapore)

- Asia Pacific (Tokyo)

- Asia Pacific (Sydney)

- South America (São Paulo)

Availability Zone = each region has several isolated locations, called Availability Zones

AWS Management Console

Web interface at https://console.aws.amazon.com

Command Line Interface (CLI)

Follow the aws-cli.pdf document for setup and examples: download and install the AWS CLI tool (MSI file) and Python. The CLI tool needs an access key to connect to AWS; one was created in IAM and downloaded locally (rootkey.csv).

Software Development Kits (SDK)

Class libraries for various platforms including iOS and Android

Query API

Low level API accessed online via RESTful or SOAP

Virtual Servers in the Cloud

Features

- Virtual machines (instances) / hypervisor created from an AMI

- Pre-configured templates for instances (AMI) Amazon Machine Images

- Various configurations of CPU, memory, storage, and networking: instance types

- Secure login using key pairs

- Instance store volumes: temporary data that's deleted when you stop or terminate your instance

- EBS (Elastic Block Store): persistent storage volumes for your data

- Multiple physical locations for resources: a region has multiple Availability Zones, so running EC2 instances in at least 2 Availability Zones provides redundancy

- Firewall configuration: protocols, ports, source IP ranges, and security groups

- Static IP addresses for dynamic cloud computing: Elastic IP addresses. By default, new instances get two IP addresses: a private IP and a public IP that is mapped to the private IP via NAT. If DNS had to be switched to a new public IP every time, propagation could take a day or more, whereas an Elastic IP can be switched over to another instance instantly.

- Tags � metadata for EC2 resources

- Virtual networks to isolate your resources from the rest of the AWS cloud: VPC (Virtual Private Cloud)

- Limit of 20 On-demand or Reserved Instances and 100 Spot Instances per region

- *For important data: replicate to S3 or EBS volumes

There are 3 parts to an EC2:

- Unit of control

Your stack (the software contained within the instance), which includes the OS, web server, etc. It's a bundle of your solution or application.

- Unit of scale

Scale out the functions by having different instances for different functions (put web server on an instance and a business logic part of application on another instance)

- Unit of resilience

As instances are scaled out and replicated, it becomes easier to recover from failures.

When creating an EC2 instance, start small, because it is easier to scale up. People commonly start too large and end up downsizing to reduce costs. There are many instance types, from micro (about as powerful as an iPhone) up to large instances with 244 GB RAM.

EC2 Instance Purchasing Options:

- On-Demand: pay by the hour (for spikes)

- Reserved: 1 to 3 year terms (reserved capacity for steady-state workloads). The hourly rate is discounted below the On-Demand rate.

- Light / Medium / Heavy Utilization: Reserved Instance offerings for different expected usage levels

- Spot Instances: bid on unused EC2 capacity (for off-hours batch jobs, Hadoop for data analysis)
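Choosing between On-Demand and Reserved is simple arithmetic: the upfront fee pays off once the hourly savings cover it. A minimal sketch; all prices below are hypothetical placeholders, not real AWS rates, and `breakeven_hours` is an illustrative name.

```python
def breakeven_hours(on_demand_rate, reserved_upfront, reserved_rate):
    """Hours of use after which a Reserved Instance becomes cheaper.

    All prices are hypothetical inputs; look up current pricing.
    Returns None if the reserved hourly rate saves nothing.
    """
    saving_per_hour = on_demand_rate - reserved_rate
    if saving_per_hour <= 0:
        return None  # the reservation never pays off
    return reserved_upfront / saving_per_hour


# Hypothetical: $0.10/h On-Demand vs. $300 upfront + $0.04/h Reserved
hours = breakeven_hours(0.10, 300.0, 0.04)
print(round(hours))            # 5000
print(round(hours / 8760, 2))  # 0.57 of a 1-year term
```

Under these made-up numbers, an instance running more than about 57% of the year favors the reservation; spiky or short-lived workloads favor On-Demand.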

Stopped instances don't lose their EBS volumes and can be started up again; while an instance is stopped, you can also detach EBS volumes and make other configuration changes. Terminated instances, however, are deleted, and by default their root EBS volume is deleted with them. Termination protection can be enabled to prevent an instance from being terminated accidentally.

Instances are deployed in a region (geographic area) appropriate to the user base or local laws, such as Asia, Europe, or the Americas. Instances also come in a variety of sizes for different use cases:

- Micro = for lower-throughput applications that need additional compute cycles periodically. Available as an EBS-backed instance only.

- HI1 = for random IOPS such as NoSQL databases, clustered database or OLTP systems (online transaction processing). The primary data storage is SSD volumes (instance store ephemeral) with a EBS backed root device.

- HS1 = high storage density and high sequential read/write, ideal for data warehousing, hadoop/mapreduce, parallel file systems

- GPU instances = for highly parallel processing, such as scientific computing, engineering, or rendering applications that use Compute Unified Device Architecture (CUDA) or OpenCL

- C1 instances = high-CPU (compute-optimized) instances. Separately, EBS-optimized instances provide dedicated throughput to EBS to maximize EBS storage performance

Key Pair

Used to authenticate access to an instance. The EC2 instance stores the public key and you keep the private key; logins to the instance are authenticated with the key. Not all instances need a key pair.

Security Group

Used to control access, like a firewall. A security group contains a name, a description, and rules for ports / protocols (e.g. SSH, FTP, HTTP). Each rule also sets the source IP range that connections are allowed from (e.g. where the administrator connects from). A source of 0.0.0.0/0 means connections are allowed from anywhere; best practice is to restrict the source to specific IP addresses.
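The rule matching can be sketched in Python to show why 0.0.0.0/0 means "anywhere" while a /32 means "this one address". This is a simplified model, assuming single-port TCP rules and IPv4 sources; `sg_allows` and the addresses are hypothetical, not an AWS API.

```python
import ipaddress


def sg_allows(rules, protocol, port, source_ip):
    """Return True if any allow rule matches; security groups have no deny rules."""
    src = ipaddress.ip_address(source_ip)
    return any(
        proto == protocol and port == rule_port and src in ipaddress.ip_network(cidr)
        for proto, rule_port, cidr in rules
    )


# Hypothetical rules: SSH only from one admin address, HTTP from anywhere.
rules = [
    ("tcp", 22, "198.51.100.7/32"),
    ("tcp", 80, "0.0.0.0/0"),
]
print(sg_allows(rules, "tcp", 22, "198.51.100.7"))  # True
print(sg_allows(rules, "tcp", 22, "203.0.113.9"))   # False
print(sg_allows(rules, "tcp", 80, "203.0.113.9"))   # True
```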

Security Groups are different between EC2 Classic vs VPC.

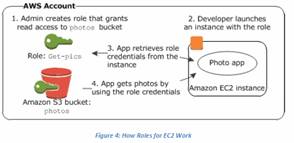

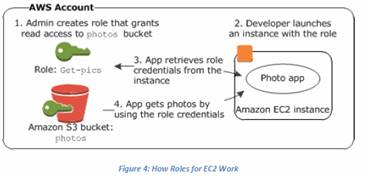

IAM Roles

A role is a preconfigured set of permissions that can cover any AWS service, not just EC2. Best practice is to use roles instead of access keys, so keys never need to be referenced anywhere (for example, in code). Roles provide temporary credentials for accessing AWS resources; an instance uses them during its lifespan, and they no longer exist after it.

User Data (Linux)

User data can be a file or text passed in through the CLI or API; it is made available to the instance through the instance metadata service. At startup it can kick off scripts (batch jobs), for example a script that installs and runs various software.
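When calling the EC2 API directly, user data is sent base64-encoded, and EC2 limits the raw (pre-encoding) data to 16 KB; the AWS CLI does the encoding for you. A minimal sketch of that preparation step; the shell script contents are a hypothetical example and `encode_user_data` is an illustrative name.

```python
import base64

MAX_USER_DATA_BYTES = 16 * 1024  # EC2 limit on raw user data, before base64


def encode_user_data(script: str) -> str:
    """Check the size limit and return the base64 text to send to the API."""
    raw = script.encode("utf-8")
    if len(raw) > MAX_USER_DATA_BYTES:
        raise ValueError(
            f"user data is {len(raw)} bytes; limit is {MAX_USER_DATA_BYTES}"
        )
    return base64.b64encode(raw).decode("ascii")


# Hypothetical bootstrap script: install and start a web server.
script = "#!/bin/sh\nyum -y install httpd\nservice httpd start\n"
encoded = encode_user_data(script)
print(base64.b64decode(encoded).decode("utf-8") == script)  # True
```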

EC2 Windows EC2Config Service (Windows)

Similar to user data, but for Windows. If an IAM role is attached to the instance, its policies apply (for example, if the role grants access to S3 or other services, the configuration can use that access).

Placement Group

Logical grouping of instances within a single Availability Zone that enables full bisection bandwidth and low-latency network performance for the tightly coupled, node-to-node communication typical of HPC (High Performance Computing) applications

Resizing

EBS backed instances must be stopped before resizing (config changes). Resizing is done manually by the user (or via API).

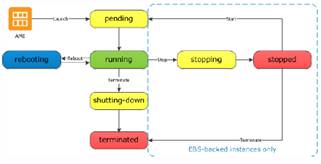

Instance Life Cycle

Starting / Stopping / Restarting Instances

EBS volumes are retained, but RAM contents are lost. In EC2-Classic, the public and private IPs are released and new ones are assigned when the instance starts again, whereas in a VPC only the public IP is released and renewed (the private IP is retained). An Elastic IP is disassociated in EC2-Classic, whereas in a VPC it stays associated.

Termination

When an EBS-backed instance is terminated, by default its root (OS) volume is deleted while other attached EBS volumes are preserved, and all EBS snapshots are preserved (a snapshot of the root volume still contains the OS). For instance store-backed (S3-backed) instances, all ephemeral volumes are lost.

Importing / Exporting

VMs can be imported as AMIs from, and exported to, Citrix Xen, Microsoft Hyper-V, or VMware vSphere.

Monitoring and CloudWatch

Following metrics can be monitored via CloudWatch (graphs also available for each):

- CPU utilization

- Disk I/O

- Network

- Status

Various alarms and alerts can be set up

Troubleshooting

If an instance immediately terminates:

- Check volume limit

- The AMI could be missing a required part

- The snapshot is corrupt

- Check the console for the reason the instance was terminated

Connection timed out

- Check security group rules

- Check CPU load on the instance

- Verify the private key file and/or the user name for the AMI

If Instance is stuck in Stopping phase

- Create a replacement instance, kick that off and terminate the stuck one

Lost Key Pair

- First create a new Key Pair

- Stop each instance that uses the old key pair and point it to the new one

An AMI (Amazon Machine Image) is the master image of an instance / virtual machine from which EC2 instances are launched. Instances can be launched from an AMI in EC2-Classic or in a VPC.

AMI Types:

- Amazon maintained (Ubuntu, RedHat, Windows � listed with price lowest to highest)

- Community maintained

- Your own machine image (can be private or shared with other accounts)

AMI Characteristics

- Region (Region and Availability Zones)

- OS

- Architecture (64 / 32)

- Launch Permissions = public (anyone can launch), explicit (to specific users only), implicit (for the owner only)

- Storage for root device

Bootstrapping / Bake an AMI

- Start an instance and install all the software needed. Save this off and then create new instances from this image which has become pre-installed and pre-configured.

- This should be balanced, though, as some configuration may still be needed after the instance is created (e.g. deploying the latest code). So bake in as much configuration as necessary and leave the rest for dynamic configuration after the instance starts.

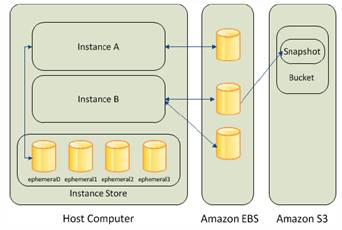

Two Storage Types for EC2

- EBS

Storage on network

- Instance Store (ephemeral drives)

Disk storage on the instance, unlike EBS, which is storage on the network. Instance stores have a shorter lifecycle than EBS: if the instance is lost, stopped, or terminated, that storage goes with it. Databases should not be kept here (they usually go on EBS or RDS).

Instance stores provide temporary block-level storage for EC2 instances. They are preattached and preconfigured on disks in the same physical server as the host EC2 instance. Some smaller micro instances (t1) use EBS storage only and have no instance storage. HI1 instances, on the other hand, can use one or more SSD-backed volumes capable of 120,000 IOPS or 2.6 GB/sec of sequential read and write when using a block size of 2 MB.

Well suited for local temporary storage that is continually changing � such as buffers, caches, scratch data, and other temp content. Unlike EBS, instance store volumes cannot be detached or attached to another instance. Ideally suited for high performance (high I/O) workloads. Size of an instance store ranges from 150 GiB to 48TiB

EC2 local instance stores are not intended to be durable disk storage; they persist only for the lifetime of the EC2 instance, so necessary data should be persisted to EBS or S3. Instances backed by EBS have no ephemeral volumes by default. Also, an instance store volume belongs to a single instance and can never be transferred.

The EC2 local instance storage capacity is fixed and defined by the EC2 instance type. It cannot be increased or decreased without replacing the instance.

Also, instance store-backed instances cannot be stopped; they can only be running or terminated. Their root device has a storage capacity of 10 GiB, which is often not enough for Windows instances.

EC2, together with EBS volumes, provides an ideal platform for self-managed RDBMSs, with prebuilt, ready-to-use solutions such as IBM DB2, Informix, Oracle, MySQL, MS SQL, PostgreSQL, Sybase, EnterpriseDB, and Vertica. Performance depends on the EC2 instance (memory, size, etc.). Software RAID can also be used to increase speed (RAID 0 striping) or redundancy (RAID 1 mirroring). But this works like a traditional data center, so scaling depends on the EC2 instance setup. Amazon RDS and DynamoDB provide automatic scaling.

Provisioned IOPS Volumes

These are designed to meet the needs of I/O-intensive workloads, such as databases. They deliver up to 4000 IOPS per volume, but a volume must be at least 100 GB to provision 3000 IOPS or more. Sizes range from 10 GB to 1 TB. Even so, they are still slower than instance store.
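The size requirement can be turned into a quick calculation. A minimal sketch, assuming a maximum ratio of 30 provisioned IOPS per GB (the ratio implied by "3000 IOPS needs at least 100 GB" above; check the current EBS documentation, since this limit has changed over time). `min_volume_gb` is an illustrative name.

```python
import math

MAX_IOPS_PER_GB = 30        # assumed ratio: 3000 IOPS -> 100 GB
MAX_IOPS_PER_VOLUME = 4000  # per-volume limit from the notes
MIN_GB = 10                 # smallest Provisioned IOPS volume


def min_volume_gb(iops):
    """Smallest volume size (GB) that can be provisioned with `iops`."""
    if iops > MAX_IOPS_PER_VOLUME:
        raise ValueError("more IOPS than one volume supports; stripe several volumes")
    return max(MIN_GB, math.ceil(iops / MAX_IOPS_PER_GB))


print(min_volume_gb(3000))  # 100
print(min_volume_gb(4000))  # 134
print(min_volume_gb(200))   # 10
```

Needing more than one volume's worth of IOPS is where the RAID 0 striping mentioned earlier comes in.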

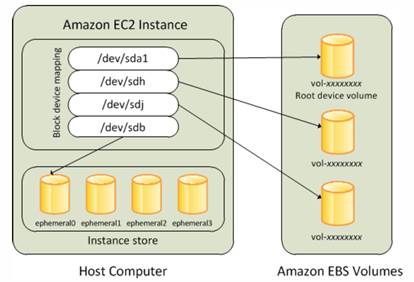

Block Device Mapping

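A block device is a storage device that moves data in sequences of bytes or bits (blocks), supports random access, and generally uses buffered I/O; examples are hard disks, CD-ROMs, and flash drives. A block device mapping defines which block devices (EBS volumes and instance store volumes) are attached to an instance when it launches.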

Workload Demand

Average Queue Length = the number of pending I/O requests for a device. Optimal is to have average queue length of 1 for every 200 Provisioned IOPS.
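The 1-per-200 guideline gives a target to compare against the queue length reported in CloudWatch. A trivial sketch of the arithmetic; `target_queue_length` is an illustrative name.

```python
def target_queue_length(provisioned_iops, iops_per_queued_request=200):
    """Average queue length suggested by the 1-per-200-Provisioned-IOPS guideline."""
    return provisioned_iops / iops_per_queued_request


print(target_queue_length(1000))  # 5.0
print(target_queue_length(4000))  # 20.0
```

A measured queue well below the target suggests the application is not issuing enough concurrent I/O to use the IOPS it pays for; well above it suggests the volume is the bottleneck.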

Pre-Warming EBS volumes

When a volume is first created or restored from a snapshot, the first I/O against each block is slow; subsequent I/O runs at optimal performance. To avoid this initial I/O penalty, volumes can be pre-warmed by touching every block once before putting them into production.
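For a volume restored from a snapshot, pre-warming amounts to reading every block once (commonly done with `dd`). A minimal Python sketch of that read-only pass; the device path is an assumption (e.g. `/dev/xvdf` on a real instance, which requires root), and any regular file works for trying it out. Pre-warming a brand-new empty volume was instead done by writing every block, which destroys data, so it is deliberately not shown here.

```python
def prewarm(path, block_size=1024 * 1024):
    """Read every block of `path` once and return the number of bytes read.

    `path` would be the attached EBS device on a real instance (needs root);
    a regular file is fine for testing.
    """
    total = 0
    with open(path, "rb", buffering=0) as dev:
        while True:
            chunk = dev.read(block_size)
            if not chunk:  # end of device/file
                break
            total += len(chunk)
    return total
```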

Auto Scaling automatically adds/removes EC2 instances based on triggers/alerts. There are 3 parts to auto-scaling EC2:

- Launch Configurations

Set of parameters such as instance size, security groups, etc

- Auto Scaling Group

Tells what to do once launched, such as which AZs and which load balancer to use, and most importantly, the min and max number of servers to run at any given time

- Auto Scaling Policy

Sets the cooldown period, which is the amount of time to wait after a scaling activity (such as adding a new instance) before another one can start, so that instances aren't added and removed constantly. Policies define how capacity changes within the min/max limits and can be triggered by CloudWatch alarms.

Instances launched by Auto Scaling are billed by the hour, with a full hour charged as soon as an instance starts. Scaling takes time: it is a polling system that works on time intervals, instances need time to boot, and ELB needs a few health check cycles before it starts sending traffic to a new instance.

CloudFormation can be used to set up the Auto Scaling configuration.

CloudWatch can be used to trigger/alarm the auto scaling. For example, when CPU utilization is greater than 50% for more than 5 minutes, scale up and when CPU is less than 30% for more than 5 minutes, scale back down. But CloudWatch could also just send a message (SNS) when these conditions are met instead of actually kicking off the auto scaling. This way the auto scaling is done manually by the admin when reading these messages.
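The example thresholds (above 50% CPU for 5 minutes to scale up, below 30% for 5 minutes to scale down) plus a cooldown can be sketched as a decision function. This is an illustration only, not how CloudWatch or Auto Scaling are implemented: it assumes one CPU sample per minute, and the 10-minute cooldown and `scaling_decision` name are hypothetical.

```python
def scaling_decision(cpu_samples, now, last_action_time,
                     high=50.0, low=30.0, period_min=5, cooldown_min=10):
    """Return +1 (scale up), -1 (scale down) or 0 (do nothing).

    cpu_samples: one CPU-utilization reading per minute, newest last.
    now, last_action_time: times in minutes.
    """
    if now - last_action_time < cooldown_min:
        return 0  # still cooling down from the previous scaling action
    window = cpu_samples[-period_min:]
    if len(window) < period_min:
        return 0  # not enough data yet
    if all(s > high for s in window):
        return 1
    if all(s < low for s in window):
        return -1
    return 0


print(scaling_decision([60, 72, 65, 80, 55], now=30, last_action_time=0))  # 1
print(scaling_decision([20, 25, 10, 28, 15], now=30, last_action_time=0))  # -1
print(scaling_decision([60, 72, 65, 80, 55], now=5, last_action_time=0))   # 0
```

The gap between the two thresholds (30% to 50%) and the cooldown both exist to stop the group from flapping between adding and removing instances.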

Limits for auto-scaling:

- 20 EC2 instances

- 100 spot instances

- 5000 EBS volumes or an aggregate size of 20TB (Total)

- These max instances are for state of pending, running, shutting down or stopping only. There is another limit of 4x the max instances for total number of instances in any state.

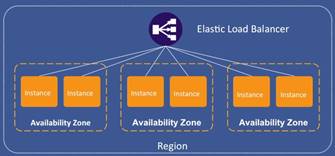

ELB does 3 things:

- Spread

Balance traffic across Availability Zones

- Offload

Remove load from EC2 instance

- Health check

Monitor and set up alerts on whole layers; can take out whole sections of the architecture (wherever the egress points are)

Best Practices:

- Persistent HTTP connections

- Don't use the underlying IP addresses; just use DNS names

Setup is done through the EC2 console. Select the ping protocol / port / path (where to check each instance, which can be the index.html page or just the root directory). The health check options for the load balancer are:

- Response Timeout = time to wait when receiving a response from health check

- Health Check Interval = amount of time between health checks

- Unhealthy Threshold = number of consecutive health check failures before declaring an instance unhealthy

- Healthy Threshold = number of consecutive health check successes before declaring an instance healthy
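The two thresholds act like a small state machine per instance: only a run of consecutive results that disagree with the current state flips it. A minimal sketch under that reading; the threshold values in the usage line are hypothetical, and `HealthCheck` is an illustrative name, not an ELB API.

```python
class HealthCheck:
    """Tracks one instance's health using healthy/unhealthy thresholds."""

    def __init__(self, healthy_threshold, unhealthy_threshold):
        self.healthy_threshold = healthy_threshold
        self.unhealthy_threshold = unhealthy_threshold
        self.healthy = True
        self._streak = 0  # consecutive results that disagree with current state

    def record(self, passed):
        """Record one health check result and return the current state."""
        if passed == self.healthy:
            self._streak = 0  # result agrees with current state: reset the run
            return self.healthy
        self._streak += 1
        needed = self.healthy_threshold if passed else self.unhealthy_threshold
        if self._streak >= needed:
            self.healthy = passed
            self._streak = 0
        return self.healthy


hc = HealthCheck(healthy_threshold=3, unhealthy_threshold=2)
print([hc.record(r) for r in [False, False, True, True, True]])
# [True, False, False, False, True]
```

With a 30-second interval (again a hypothetical value), an unhealthy threshold of 2 means a failed instance is pulled out of rotation after roughly a minute.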

Example here (this is for the two Linux instances in Lab examples)

http://LinuxLoadBalancer-1936685151.us-west-2.elb.amazonaws.com

Following is from LAB document example

Create 2 Linux instances using a bootstrap script given by Amazon for this demo:

```sh
#!/bin/sh
# Lab bootstrap (user data): download Amazon's demo script and pipe it to the shell
curl -L http://bootstrapping-assets.s3.amazonaws.com/bootstrap-elb.sh | sh
```

Two Linux instances were created with Apache and PHP pre-installed and a default index.php page. To connect to these instances, use the original key pair (downloaded locally).

http://ec2-54-200-250-195.us-west-2.compute.amazonaws.com/

http://ec2-54-201-108-203.us-west-2.compute.amazonaws.com/

Set up the ELB and point it to the two instances. The ELB site is here:

http://labelb-1827406090.us-west-2.elb.amazonaws.com/

Set up the Auto Scaling Group

http://ec2-54-201-210-96.us-west-2.compute.amazonaws.com/

Terminated this instance and the auto scale automatically created a new one:

http://ec2-54-200-154-184.us-west-2.compute.amazonaws.com/

Create an SNS topic (AutoScaling) and set the recipient email address. Then set notifications on the Auto Scaling Group to this topic (this can be done through the AWS CLI or the Console).

Create Scaling Policies � one for scaling up (Adds Instance) and another for scaling down (Removes Instance). This also automatically sets alarms in CloudWatch. Note that for this LAB, we are not using ELB with Auto Scaling. Ideally, you would be using both.

Creating a Windows instance: no user data is needed; it uses the EC2Config Service instead (see above)

When logging in for the first time, use the private key file to decrypt the Administrator's password.

Administrator / LQUu*%KuQHT

*The private key file is in the same AWS wiki folder as this study guide

http://ec2-54-201-201-92.us-west-2.compute.amazonaws.com

Elastic IP created and assigned to the Windows instance here:

To connect to an EC2 instance using PuTTY, see the end of this lab guide or this:

http://docs.aws.amazon.com/AWSEC2/latest/UserGuide/putty.html

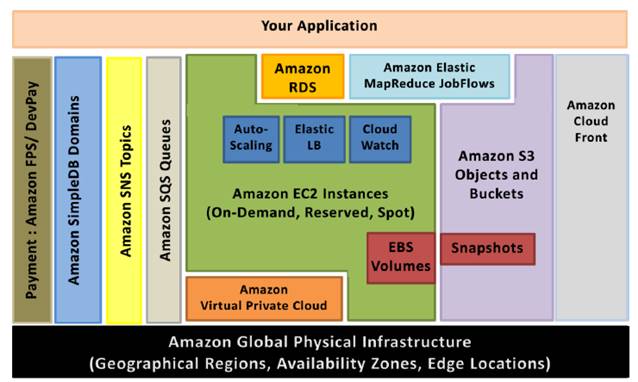

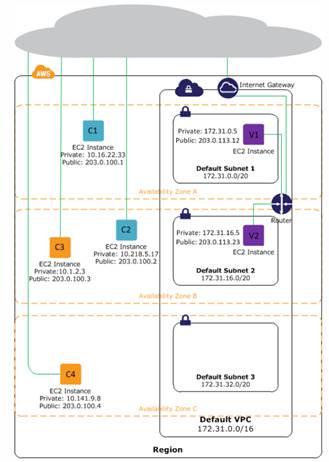

VPC is a network layer specifically for EC2. Instances are deployed into the VPC, and the user can select IP ranges and subnets and configure route tables, network gateways, and security settings. There is a default VPC that instances are launched into automatically unless another VPC is chosen. Instances must use an Internet Gateway to access the internet; one is automatically provided in the default VPC. Instances deployed in nondefault subnets do not get a public IP, so they need an Internet Gateway and an Elastic IP to have internet access.

Subnets are used to group instances. By default, the VPC's local route lets instances in all subnets of the VPC reach each other, with security groups and network ACLs restricting that traffic. Instances in a private subnet can use a NAT instance in the public subnet (with an EIP) for internet access (for example, to get updates). Subnets whose route table has a route to an Internet Gateway are considered public subnets; subnets without one are considered private subnets.

In EC2, there are some benefits to using a VPC (including the default VPC) over EC2-Classic:

- Assign static private IPs to instances that persist across starts and stops (the instance never loses its IP)
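What makes a subnet public or private is just its route table: the most specific (longest-prefix) matching route wins. A minimal sketch of that lookup; the route targets `igw-example` and `nat-instance-example` are hypothetical placeholders for an Internet Gateway ID and a NAT instance ID, and `next_hop` is an illustrative name.

```python
import ipaddress


def next_hop(route_table, destination):
    """Pick the most specific (longest-prefix) route that contains destination."""
    dest = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(cidr), target)
        for cidr, target in route_table
        if dest in ipaddress.ip_network(cidr)
    ]
    if not matches:
        return None
    return max(matches, key=lambda m: m[0].prefixlen)[1]


# Hypothetical tables; the "local" route covers the whole VPC CIDR.
public_rt = [("10.0.0.0/16", "local"), ("0.0.0.0/0", "igw-example")]
private_rt = [("10.0.0.0/16", "local"), ("0.0.0.0/0", "nat-instance-example")]

print(next_hop(public_rt, "10.0.1.25"))      # local
print(next_hop(public_rt, "203.0.113.10"))   # igw-example
print(next_hop(private_rt, "203.0.113.10"))  # nat-instance-example
```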

- Assign multiple IP to instances

- Define network interfaces, and attach one or more interface to instance

- Change security group membership for your instance

- Control the outbound/inbound traffic from instance (ingress / egress filtering)

- Network Access Control List (ACL)

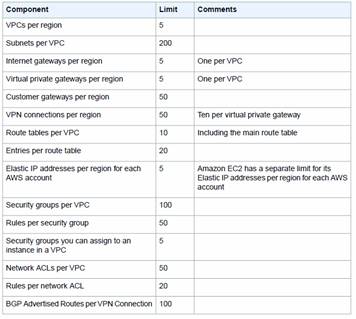

Some capabilities

- User-defined address space of up to 65,536 addresses (a /16 CIDR block)

- Up to 200 user defined subnets (setup virtual routing, DHCP servers, NAT instances, Internet gateways, ACLs)

- Private IPs stable once assigned

- Elastic Network Interfaces (ENI)

- A VPC can span multiple AZs, although each subnet must stay in a single AZ

A VPC can run dedicated instances (not shared with any other customers). Today, VPC is enabled by default for EC2 instances. EC2-Classic instances get a private IP from a shared private IP address range (within AWS), and each instance also has a public IP address from Amazon's IP pool. In EC2-VPC, each instance gets a private IP from the VPC's private IP range. There is no public IP by default unless the user assigns an Elastic IP, and traffic to that address always goes through the VPC's gateway at the network edge first.

Dynamic Host Configuration Protocol (DHCP)

To use your own domain names, create a new DHCP options set in the VPC. Up to 4 separate DNS servers can be defined.

Amazon DNS

By default, all instances in the default VPC get DNS hostnames (DNS server = AmazonProvidedDNS). If this is disabled, those instances do not receive DNS hostnames.

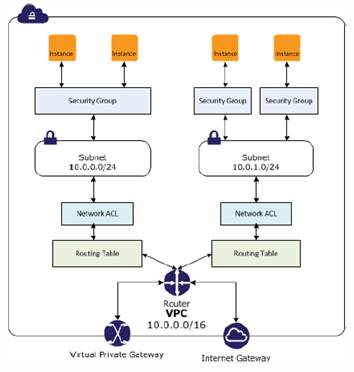

VPC has two features for security:

- Security Groups = like a firewall for EC2 instances controlling inbound and outbound traffic at instance level. These groups are specific to the VPC only.

- Network Access Control List (ACLs) = like a firewall for associated subnets, controlling inbound and outbound traffic at subnet level. These lists are specific to the VPC only.

Each EC2 instance requires 1 or more security groups (if none is set, the default security group is used). The network ACL is an optional, additional layer of security on top of this. Use AWS IAM to manage which users can modify these policies.

Some differences between Security Groups and Access Control Lists (ACL)

- SG is at instance level, ACL is at subnet level

- SG has allow rules only, ACL can have allow rules and deny rules

- SG is stateful (return traffic is automatically allowed), ACL is stateless (return traffic must be explicitly allowed)

- SG evaluates all rules before deciding whether to allow traffic, ACL processes rules in number order and applies the first rule that matches

Recommendations for ACL (Best Practice)

Have a single subnet that can receive from and send to the internet (like a DMZ), then set up the ACL as follows for inbound and outbound traffic:

- Rule 100 = TCP 80 allow (http)

- Rule 110 = TCP 443 allow (https)

- Rule 120 = TCP 22 allow (SSH)

- Rule 130 = TCP 3389 allow (rdp)

- Rule * = all / all deny (blocks everything else except above)
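The rule list above can be evaluated the way a network ACL does: in ascending rule-number order, stopping at the first match, with `*` as the final catch-all. A simplified sketch, assuming single ports rather than port ranges; `nacl_decision` is an illustrative name, and the catch-all is represented as rule number `None`.

```python
def nacl_decision(rules, protocol, port):
    """Evaluate network ACL rules in ascending rule-number order; first match wins.

    rules: list of (rule_number, protocol, port, action). The "*" catch-all
    rule is given rule number None and is always evaluated last.
    """
    numbered = sorted((r for r in rules if r[0] is not None), key=lambda r: r[0])
    catch_all = [r for r in rules if r[0] is None]
    for _, proto, rule_port, action in numbered + catch_all:
        if proto in ("all", protocol) and rule_port in ("all", port):
            return action
    return "deny"


dmz_rules = [
    (100, "tcp", 80, "allow"),    # HTTP
    (110, "tcp", 443, "allow"),   # HTTPS
    (120, "tcp", 22, "allow"),    # SSH
    (130, "tcp", 3389, "allow"),  # RDP
    (None, "all", "all", "deny"), # rule * blocks everything else
]
print(nacl_decision(dmz_rules, "tcp", 443))  # allow
print(nacl_decision(dmz_rules, "tcp", 21))   # deny
```

Because ACLs are stateless, the outbound side of such a DMZ subnet also needs a rule allowing the ephemeral port range (e.g. 1024-65535) so that responses can reach clients; a security group would not need this.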

ENI is a virtual network interface that attaches to EC2 instances (only available in VPC) and has the following attributes. These attributes follow the network interface, not the instance, so the interface can easily be moved to other instances:

- Primary private IP address

- One or more secondary private IP addresses

- One Elastic IP per private IP address

- A MAC address

- One or more security groups

- Source / destination check flag

- A network interface that can attach to an instance, then detach it and re-attach it to another instance.

Attaching multiple ENI to an instance is useful when:

- Create a management network

- Use network and security appliances in your VPC

- Create dual-homed instances with workloads on distinct subnets

There are 4 VPC configuration templates in the AWS Console (VPC wizard):

- Single public subnet with internet access

- Public and private subnets: the private subnet can only access the internet via NAT through the public subnet

- Public and private subnets with VPN: same as above, except there is a VPN connection available to the private subnet

- Private subnet with VPN only

This lab will create 1 VPC with 1 subnet and then add a 2nd subnet to it. One will be public while the other is private.

Dedicated Network Connection to AWS

- Dedicated bandwidth to AWS in 1Gbps or 10Gbps

- Full access to public endpoints, EC2, S3 and VPCs (VLAN tagging maps to public side or VPC)

Requirements for Direct Connect are:

- Colocated in an existing Direct Connect location

- Service provider is a member of the AWS Partner Network (APN)

- Single-mode fiber: 1000BASE-LX transceiver for 1 Gbps connections, 10GBASE-LR for 10 Gbps connections

A request has to be submitted to implement Direct Connect

Scalable Domain Name Web Service

Latency-based Routing (LBR) = when an application runs in several Amazon EC2 regions, you create an LBR record for each region. Route 53 routes end users to the endpoint that provides the lowest latency.

A DNS domain name cannot exceed 255 bytes, including the dots. It can contain any ASCII characters (though some must be escaped), and Route 53 supports any valid domain name.
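A minimal length check for the rule above. It additionally assumes the standard DNS limit of 1 to 63 bytes per label (from RFC 1035, not stated in these notes) and measures the name as written with dots, which simplifies the wire-format rule. `is_valid_length` is a hypothetical helper.

```python
def is_valid_length(domain: str) -> bool:
    """Check DNS length limits on an ASCII domain name.

    A single trailing dot (fully qualified form) is ignored.
    """
    name = domain[:-1] if domain.endswith(".") else domain
    if len(name.encode("ascii")) > 255:  # whole name, dots included
        return False
    # Each label must be 1-63 bytes; an empty label means ".." was used.
    return all(1 <= len(label) <= 63 for label in name.split("."))


print(is_valid_length("www.example.com"))     # True
print(is_valid_length("a" * 64 + ".com"))     # False (label too long)
print(is_valid_length(("a" * 60 + ".") * 5))  # False (over 255 bytes)
```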

When creating a hosted zone, Route 53 automatically creates a Name Server (NS) record listing 4 name servers and a Start of Authority (SOA) record.

A Format

An A record value must be of IPv4 format: 192.0.2.1

AAAA Format

An AAAA record value must be an IPv6 address in colon-separated hexadecimal format: 2001:0db8:85a3:0:0:8a2e:0370:7334
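The A and AAAA value formats can be checked with Python's standard `ipaddress` module. `valid_record_value` is a hypothetical helper, not part of any Route 53 API.

```python
import ipaddress


def valid_record_value(record_type: str, value: str) -> bool:
    """True if value is a valid A (IPv4) or AAAA (IPv6) address."""
    expected = {"A": ipaddress.IPv4Address, "AAAA": ipaddress.IPv6Address}[record_type]
    try:
        return isinstance(ipaddress.ip_address(value), expected)
    except ValueError:  # not an IP address at all
        return False


print(valid_record_value("A", "192.0.2.1"))                             # True
print(valid_record_value("AAAA", "2001:0db8:85a3:0:0:8a2e:0370:7334"))  # True
print(valid_record_value("A", "2001:0db8:85a3:0:0:8a2e:0370:7334"))     # False
```

The same module also shows the compressed form of an IPv6 value: `ipaddress.ip_address("2001:0db8:85a3:0:0:8a2e:0370:7334").compressed` gives `2001:db8:85a3::8a2e:370:7334`.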

CNAME Format

A CNAME can be used for any subdomain but not for the zone apex: example.com is a zone apex and cannot be a CNAME, but www.example.com can be

MX Format (Mail Exchange)

NS Format (Name Server)

Route53 name servers look like this:

- ns-2048.awsdns-64.com

- ns-2049.awsdns-65.net

- ns-2050.awsdns-66.org

PTR Format

SOA Format (Start of Authority)

SOA record identifies the base DNS info about the domain:

ns-2048.awsdns-64.net. hostmaster.example.com. 1 7200 900 1209600 86400
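The numbers in the SOA value follow the standard SOA layout: serial, refresh, retry, expire and minimum TTL, the last four in seconds. A small parser makes the fields explicit; `SOA` and `parse_soa` are hypothetical names.

```python
from typing import NamedTuple


class SOA(NamedTuple):
    primary_ns: str
    admin: str        # "hostmaster.example.com." means hostmaster@example.com
    serial: int
    refresh: int      # seconds
    retry: int        # seconds
    expire: int       # seconds
    minimum_ttl: int  # seconds


def parse_soa(value: str) -> SOA:
    ns, admin, *numbers = value.split()
    return SOA(ns, admin, *(int(n) for n in numbers))


soa = parse_soa("ns-2048.awsdns-64.net. hostmaster.example.com. 1 7200 900 1209600 86400")
print(soa.refresh // 3600, "hours")  # 2 hours
print(soa.expire // 86400, "days")   # 14 days
```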

SPF Format

SRV Format (Service locator; the value is space-separated: priority, weight, port and domain name)

TXT Format

Route CloudFront URLs via Route53

Route Elastic Load Balancing (ELB) via Route 53

Route to EC2 instance through an Elastic IP (EIP) via Route 53

Route to S3 object or RDS

Name Servers = link your registrar with your DNS hosting service provider (like a main DNS)

Start of Authority = identifies the base DNS information about the domain

Hosted Zone

Collection of resource record sets for a specified domain (e.g. example.com); tells DNS how to route traffic for that domain

Weighted Resource Record

Weighted record sets let Route 53 choose among multiple record sets with the same domain name. Each record set's weight divided by the total of all the weights gives the probability that it is selected.
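The weight arithmetic above, as a sketch: the record names and weights are hypothetical, and Python's `random.choices` stands in for Route 53's internal selection.

```python
import random
from collections import Counter


def selection_probabilities(weights: dict) -> dict:
    """Each record's weight divided by the sum of all weights."""
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


def pick(weights: dict, rng: random.Random) -> str:
    """Choose one record set with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


weights = {"server-a": 3, "server-b": 1}  # hypothetical record sets
print(selection_probabilities(weights))  # {'server-a': 0.75, 'server-b': 0.25}

rng = random.Random(42)
counts = Counter(pick(weights, rng) for _ in range(10_000))
print(counts["server-a"], counts["server-b"])  # roughly 7500 2500
```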

Alias Resource Record

An alias resource record set contains a pointer to a CloudFront distribution, an Elastic Load Balancing load balancer, an S3 bucket configured as a static website, or another Route 53 resource record set in the same hosted zone.

AWS Storage Options:

Scalable Storage in the Cloud

S3 is storage for the internet, accessible from EC2 or from anywhere on the web. It supports encryption and a virtually unlimited amount of data (an unlimited number of objects per bucket, with each object limited to 5 TB). Each object has a unique, developer-assigned key. S3 is accessed through the REST API (with SDKs for Java, .NET, PHP, Ruby), the AWS CLI (command line) and the web console.

Set up automatic archiving to Glacier by using the Lifecycle option. S3 is typically used for static content and as an origin for a CDN (CloudFront). Example uses are photos, videos, large-scale analytics (financial transactions), critical data and disaster recovery. S3 is redundant and supports versioning. It is often used alongside a database (such as DynamoDB), where the database holds metadata (such as the object name) that references the object in S3.

S3 provides 99.999999999% (11 nines) durability per object and 99.99% availability over a given year. Reduced Redundancy Storage (RRS) is an S3 option with lower durability (99.99%) at lower cost. It is a cost-effective yet highly available solution, great for anything that can easily be reproduced (like thumbnails or transcoded media).
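To make the durability figures concrete, the expected number of objects lost per year is the object count times (1 - durability). The 10 million objects here are an illustrative assumption, and the calculation treats losses as independent. `Fraction` keeps the arithmetic exact.

```python
from fractions import Fraction


def expected_annual_losses(objects: int, durability_percent: str) -> Fraction:
    """Expected objects lost per year = objects x (1 - annual durability)."""
    return objects * (1 - Fraction(durability_percent) / 100)


standard = expected_annual_losses(10_000_000, "99.999999999")  # 11 nines
rrs = expected_annual_losses(10_000_000, "99.99")               # Reduced Redundancy

print(float(standard))  # 0.0001, i.e. about one object every 10,000 years
print(float(rrs))       # 1000.0 objects per year
```

This is why RRS is only suggested for data that can be regenerated.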

Multi-Factor Authentication (MFA) Delete requires two forms of authentication to delete: AWS account credentials plus a six-digit token code. Users can be created for roles and authorization, and access can be scoped geographically (for example, allowing users in a particular region to access only that region's S3 buckets). Bucket policies apply to all objects in a bucket; access to specific objects can be set through object-level permissions. Policies can also be controlled with IAM.

Some Anti-Patterns:

- S3 is not a file system, not POSIX-compliant

- S3 is not queryable, must use bucket name and key to retrieve

- S3 has high read / write latencies, so not intended for dynamic or rapidly changing data

- For long term storage (archive) where not accessed often, it is more cost effective to use Amazon Glacier

- No dynamic website hosting (but good for static website hosting)

Every object is contained in a bucket, and a single AWS account can have up to 100 buckets. For example, the object photos/puppy.jpg stored in the example bucket can be addressed with the URL:

http://example.s3.amazonaws.com/photos/puppy.jpg. Buckets also serve to organize objects, identify them, control access to them and aggregate usage for reporting. Every object in a bucket has a key: its unique identifier. In the URL above, example is the bucket and "photos/puppy.jpg" is the key.
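The two URL styles used in these notes (bucket as subdomain, and bucket as first path segment, as in the lab URLs later) can be built and taken apart as below. The helper names are hypothetical, only the US Standard endpoint is handled, and keys are assumed to need no URL encoding.

```python
from typing import Tuple
from urllib.parse import urlsplit

SUFFIX = ".s3.amazonaws.com"


def virtual_hosted_url(bucket: str, key: str) -> str:
    return f"http://{bucket}{SUFFIX}/{key}"


def split_bucket_and_key(url: str) -> Tuple[str, str]:
    """Recover (bucket, key) from either URL style (US Standard endpoint only)."""
    parts = urlsplit(url)
    host, path = parts.hostname or "", parts.path.lstrip("/")
    if host.endswith(SUFFIX):            # bucket.s3.amazonaws.com/key
        return host[: -len(SUFFIX)], path
    bucket, _, key = path.partition("/")  # s3.amazonaws.com/bucket/key
    return bucket, key


url = virtual_hosted_url("example", "photos/puppy.jpg")
print(url)                        # http://example.s3.amazonaws.com/photos/puppy.jpg
print(split_bucket_and_key(url))  # ('example', 'photos/puppy.jpg')
```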

The stored data are called objects in S3. Objects consist of the actual data plus metadata (date, version, etc). There is unlimited storage for objects. You cannot create a bucket inside another bucket. Bucket ownership cannot be transferred.

Regions:

- US Standard

- US West (Oregon)

- US West (Northern California)

- EU (Ireland)

- Asia Pacific (Singapore)

- Asia Pacific (Sydney)

- Asia Pacific (Tokyo)

- South America (Sao Paulo)

Objects stored in a region never leave that region unless you explicitly transfer them to another region. Within a region, objects are replicated across multiple servers, and this replication may take some time. For example, when an object is created, modified or deleted, a subsequent read might not show the new object, might report that it does not exist, might return prior data, or might return deleted data until the change is fully propagated. S3 does not support object locking: if two writes to the same object are received simultaneously, the one with the latest timestamp wins.

Bucket Policies (Access control)

Bucket policies provide centralized access control to buckets and objects based on a variety of conditions, including S3 operations, requesters, resources and aspects of the request (e.g. IP address). For example, a policy could restrict access to a particular S3 bucket by origin (such as the corporate network), by business hours, or to a custom application (identified by its user agent string). Only the bucket owner can set policies for that bucket.
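As an illustration of the IP-address condition, here is a sketch that builds a bucket policy document in the AWS access policy language. The bucket name and CIDR range are hypothetical placeholders; the resulting JSON would be attached to the bucket by its owner.

```python
import json


def ip_restricted_read_policy(bucket: str, cidr: str) -> str:
    """Bucket policy allowing anonymous GetObject only from one IP range."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowReadFromCorporateNetwork",
                "Effect": "Allow",
                "Principal": "*",
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket}/*",  # every object in the bucket
                "Condition": {"IpAddress": {"aws:SourceIp": cidr}},
            }
        ],
    }
    return json.dumps(policy, indent=2)


print(ip_restricted_read_policy("examplebucket", "192.0.2.0/24"))
```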

You can also use AWS Identity and Access Management (IAM) to create users under a single AWS account with different levels of access; each IAM user has its own set of access keys.

Operations

- Create a bucket

- Write an Object

- Read object

- Delete object

- Listing keys: list of contents

Bucket Names

There is a direct mapping between S3 buckets and subdomains: objects are accessed through the REST API under bucketname.s3.amazonaws.com.

Versioning

Versioning allows you to preserve, retrieve, and restore every version of every object stored in this bucket. This provides an additional level of protection by providing a means of recovery for accidental overwrites or deletions. Once enabled, Versioning cannot be disabled and you will not be able to add Lifecycle Rules for this bucket.

Alerts and Error Handling

S3 buckets can be set up to send Amazon Simple Notification Service (SNS) notifications on certain events. Due to the distributed nature of S3, requests can be temporarily routed to the wrong facility, especially immediately after buckets are created or deleted.

The following buckets were created (with sample object URLs):

sfishs3bucket01 (private, RRS, US Standard region)

https://s3.amazonaws.com/sfishs3bucket01/images/20130609_091627.jpg

sfishs3bucket02 (public, logging, versioning*, Northern California)

https://s3-us-west-1.amazonaws.com/sfishs3bucket02/images/20130609_091949.jpg

- File modified: view version

sfishs3bucket03 (static site, lifecycle rules, Glacier archive*, US Standard region)

https://s3.amazonaws.com/sfishs3bucket03/index.html

*Buckets that are under version control cannot be set with Life Cycle Rule (archiving)

Archive

Storage in the Cloud

Some quick facts

- Extremely cheap archive cloud storage ($0.01 / GB / month).

- Retrieve data in 3 to 5 hours

- Use data lifecycle policies to move data from S3 to Glacier. Can also directly import/export.

- Durability is 99.999999999% (11 nines) per year.

- Performs regular systematic data integrity checks and auto self-healing.

- A single archive can be up to 4 TB, but there is no limit on the total amount stored in the service. Scales up and down.

- 2 ways to interface

o REST web services (java or .Net SDKs available too). Can setup jobs and send notifications through SNS.

o Object lifecycle management via S3 (auto policy driven). Check S3 guide for details.

- Obviously, since Glacier is archive service, we have following 2 anti-patterns:

o Rapid data changes

o Real time access

Vaults

- Controls various archives

- Controls access

- Send notifications to SNS

Store objects to Glacier from S3

Life Cycle Rules

- Set up in S3: create a start date and an expiration time

- Restoring is done in S3 as well
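A lifecycle rule combines a key prefix with a transition (archive to Glacier) and an expiration measured from object creation. The sketch below computes when each would happen; the rule values and helper name are hypothetical, and S3's actual rounding of the time to the next midnight UTC is simplified to adding whole days.

```python
from datetime import date, timedelta
from typing import Optional, Tuple

# Hypothetical rule: objects under logs/ go to Glacier after 30 days
# and are deleted after 365 days.
RULE = {"prefix": "logs/", "transition_days": 30, "expiration_days": 365}


def lifecycle_dates(key: str, created: date, rule: dict) -> Optional[Tuple[date, date]]:
    """Return (archive date, expiration date), or None if the rule does not apply."""
    if not key.startswith(rule["prefix"]):
        return None
    archive_on = created + timedelta(days=rule["transition_days"])
    expire_on = created + timedelta(days=rule["expiration_days"])
    return archive_on, expire_on


print(lifecycle_dates("logs/2013-06-09.gz", date(2013, 6, 9), RULE))
# (datetime.date(2013, 7, 9), datetime.date(2014, 6, 9))
print(lifecycle_dates("images/a.jpg", date(2013, 6, 9), RULE))  # None
```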

Provides durable block-level storage for EC2 instances (VMs). Volumes are network-attached and persist independently of the instance; like a physical hard drive, a volume can be formatted with the file system of your choice and used by the instance's OS.

Some quick facts:

- An EBS volume can serve as the boot drive for an EC2 instance

- EBS volume sizes range from 1GB to 1TB.

- Ideally used as primary storage for database or file system, or for any application requiring block-level storage.

- EBS has high and consistent rate of disk reads and writes.

- Two volume types:

o Standard volumes: suited to boot drives; deliver about 100 IOPS on average, with burst capability

o Provisioned IOPS volumes: for high-performance, I/O-intensive workloads such as databases. Support up to 2,000 IOPS per volume, and can be striped to deliver thousands of IOPS per EC2 instance

- Since EBS is network storage, performance can be affected by network I/O. EC2 offers EBS-optimized instance types with dedicated throughput to EBS of 500 Mbps or 1,000 Mbps. With this setting, Provisioned IOPS volumes deliver within 10% of their provisioned IOPS performance 99.9% of the time.

- Multiple EBS volumes can be attached to an EC2 instance. RAID 0 or logical volume manager software can be used to aggregate available IOPS, total volume throughput and total volume size.

- Backups (snapshots) are persisted on S3 and are incremental: each contains only the changes since the last snapshot. EBS volumes with 20 GB or less of data modified since the most recent snapshot can expect an annual failure rate (AFR) of 0.1% to 0.5%. Volumes with more modified data should expect a proportionally higher AFR.

- If an EBS volume fails, it can be re-created from its prior snapshots. An EBS volume is tied to a single Availability Zone, so if that zone goes down, the volume does too. Snapshots, however, are available to all zones in the region.

- Cost of EBS is in three components

o Provisioned storage (the volume)

o I/O requests (frequency)

o Snapshot storage (backup)

- EBS volumes cannot be resized. If resizing is needed, two options:

o Attach a new volume and use together with existing one

o Snapshot original volume, remove that volume, create new desired volume, restore from snapshot

- Interfaces are available as SOAP and REST services to create, delete, describe, attach and detach EBS volumes on EC2 instances, and to create, delete and describe snapshots from EBS to S3

- No Interface available for the data itself. Just appears as drive under EC2.

- Anti-Patterns:

o Temporary storage: consider using SQS or ElastiCache

o Highly durable storage: consider using S3 or Glacier

o Static data or web content: consider using S3
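The incremental snapshot behaviour described above (each snapshot holds only changed blocks, and a volume is rebuilt from all prior snapshots) can be modelled with dictionaries of block number to contents. This is a toy model under stated assumptions, not how EBS stores data internally; deleted blocks are ignored to keep it short.

```python
from typing import Dict, List

Block = Dict[int, bytes]  # block number -> block contents


def take_snapshot(volume: Block, previous: Block) -> Block:
    """Incremental snapshot: keep only blocks that differ from `previous`.

    `previous` is the volume state as of the last snapshot ({} for the first).
    """
    return {n: data for n, data in volume.items() if previous.get(n) != data}


def restore(snapshots: List[Block]) -> Block:
    """Rebuild a volume by applying every snapshot in order, oldest first."""
    state: Block = {}
    for snap in snapshots:
        state.update(snap)
    return state


volume = {0: b"boot", 1: b"data-v1", 2: b"logs"}
snap1 = take_snapshot(volume, {})                # first snapshot: all 3 blocks
volume[1] = b"data-v2"                           # modify one block
snap2 = take_snapshot(volume, restore([snap1]))  # second snapshot: 1 block

print(len(snap1), len(snap2))             # 3 1
print(restore([snap1, snap2]) == volume)  # True
```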

Move large amounts of data into and out of AWS (EBS snapshots, S3 buckets and Glacier vaults) using portable storage devices for transport; the data is loaded over Amazon's internal network, bypassing the internet. Typically used for data that would take a week or more to transfer over the internet. Examples:

- Initial data upload to AWS

- Content distribution or data interchange to/from customers

- Offsite backup / archive

- Disaster recovery

Transfers typically run at about 100 MBps, bounded by the read/write speed of the portable storage device.
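The "week or more" rule of thumb can be checked with simple arithmetic. The sketch assumes decimal terabytes and that only 80% of the link is usable; both the utilization figure and the example link speed are assumptions.

```python
def transfer_days(terabytes: float, megabits_per_second: float, utilization: float = 0.8) -> float:
    """Days to move `terabytes` over a network link at the given usable fraction."""
    bits = terabytes * 1e12 * 8
    seconds = bits / (megabits_per_second * 1e6 * utilization)
    return seconds / 86_400


def suggest_import_export(terabytes: float, megabits_per_second: float) -> bool:
    """Rule of thumb from the notes: ship a device if the network takes a week or more."""
    return transfer_days(terabytes, megabits_per_second) >= 7


print(round(transfer_days(10, 100), 1))  # 11.6 days for 10 TB on a 100 Mbps link
print(suggest_import_export(10, 100))    # True
```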

Integrates on-premises IT environments with cloud storage. Ideal for backups to S3, disaster recovery and data mirroring to cloud-based compute resources.

The AWS Storage Gateway software appliance is downloaded as a VM image and run in the local datacenter, where it exposes iSCSI storage volumes to local servers. It can retain a portion of the data locally (such as cached data), with volumes of up to 32 TB each. The gateway connects back to S3 on the backend and asynchronously backs up data to the cloud.

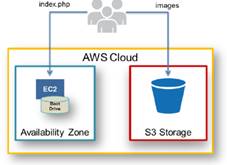

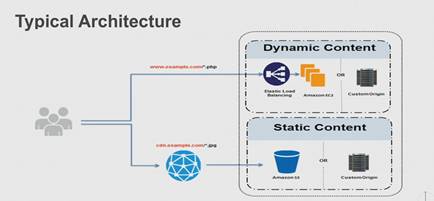

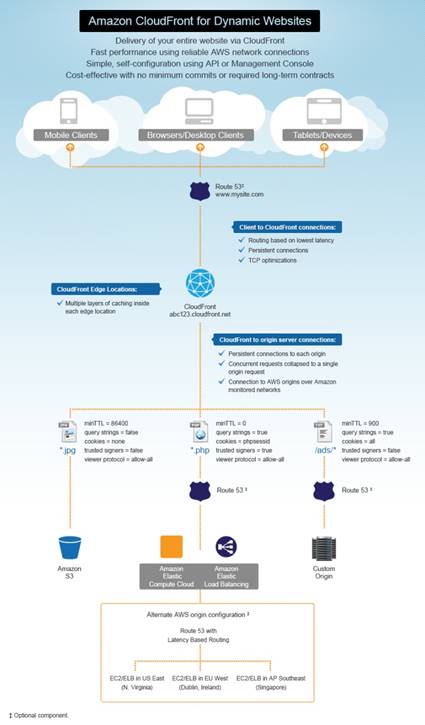

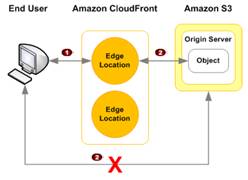

CloudFront is a CDN web service that integrates with other AWS services. Requests for content are automatically routed to the nearest edge location for high performance; users are transparently redirected to a copy of the file at that edge location. Content is organized into distributions, each with a unique cloudfront.net domain name (e.g. abc123.cloudfront.net). Distributions can either download your content (HTTP/HTTPS) or stream it (RTMP).

http://aws.amazon.com/cloudfront/

CloudFront is best suited to static content. Dynamic content (typically generated on EC2) is difficult to cache, so it usually is not served from the CloudFront cache, but cache as much as you can. Query strings can be cached too (e.g. /api/GetBooks?cat=math).

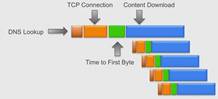

Each second of download/load time on a webpage is costly. A typical page load is made up of many requests for various page content, which can come from various origins.

How can dynamic content be optimized?

Reduce TCP Connection time: Keep-Alive Connections & SSL Termination

Reduce First Byte time: Keep-Alive Connections

Reduce Content Download time: TCP/IP optimizations (also Route 53)

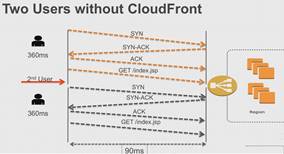

HTTP runs on TCP/IP, which requires a handshake (SYN, SYN-ACK, ACK) for each connection. Example without CloudFront:

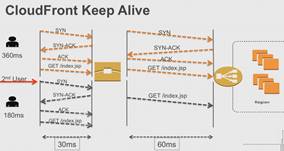

Keep-Alive connections reuse the connection opened by the initial SYN/SYN-ACK/ACK handshake, so subsequent requests go straight through without a new handshake.

Keep-Alive connections work best when there are many users or many requests. SSL adds further handshake round trips on top of TCP's, so Keep-Alive saves even more time with SSL. CloudFront does this through SSL Termination, which can be Half Bridge or Full Bridge.
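A rough round-trip count shows why Keep-Alive helps more with SSL. The model is a simplification with assumed costs: 1 round trip (RTT) for the TCP handshake, 2 RTTs for a full SSL/TLS handshake, and 1 RTT per request, with requests sent one after another.

```python
def round_trips(requests: int, keep_alive: bool, tls: bool, tls_handshake_rtts: int = 2) -> int:
    """Round trips to fetch `requests` objects sequentially (simplified model)."""
    setup = 1 + (tls_handshake_rtts if tls else 0)  # TCP handshake (+ SSL)
    connections = 1 if keep_alive else requests     # one reused connection vs one each
    return connections * setup + requests           # plus 1 RTT per request/response


for tls in (False, True):
    without = round_trips(10, keep_alive=False, tls=tls)
    with_ka = round_trips(10, keep_alive=True, tls=tls)
    print(f"tls={tls}: {without} RTTs without keep-alive, {with_ka} with")
# tls=False: 20 RTTs without keep-alive, 11 with
# tls=True: 40 RTTs without keep-alive, 13 with
```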

TCP/IP optimization is used to reduce content download time. TCP Slow-Start begins by sending a small number of packets and sends the next, larger set only after an ACK is received. CloudFront's Slow-Start optimization works because Slow-Start is done only for the initial connection; any requests thereafter reuse that connection and immediately send the full 4 packets (then more after each ACK).
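The benefit of skipping Slow-Start can be sketched by counting round trips while the send window doubles after each round of ACKs. This is a simplified model (no packet loss, no slow-start threshold); the 15-packet response size is an illustrative assumption, and the warm window of 4 matches the "full 4 packets" above.

```python
def rtts_to_send(segments: int, initial_window: int) -> int:
    """Round trips to deliver `segments` when the window doubles every RTT."""
    sent, window, rtts = 0, initial_window, 0
    while sent < segments:
        sent += window  # send a full window, then wait for the ACKs
        window *= 2     # slow start: window doubles per round trip
        rtts += 1
    return rtts


print(rtts_to_send(15, initial_window=1))  # 4 (1 + 2 + 4 + 8): cold connection
print(rtts_to_send(15, initial_window=4))  # 3 (4 + 8 + 16): reused connection
```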

CloudFront is a web service focused on improving performance (speed) via distribution. It retrieves content from edge location (data center) or custom source (your server) with the lowest latency. If already in an edge location, retrieves content instantly.

Example: A regular jpg image from some site may take 10 hops (tracert) to get to end user via several states (possibly even further from source). CloudFront eases this by reducing the number of hops.

The Origin Server can be of two types:

- HTTP/HTTPS = Amazon S3 bucket, Elastic Compute Cloud (EC2) or own web server

- RTMP = streaming via Adobe Flash Media Server; the origin must always be an Amazon S3 bucket (uses port 1935 and port 80)

Content/data served is made up of objects, and access can be controlled via CloudFront URLs. CloudFront sends configuration information (not content) to edge locations, which then cache copies of your objects. Expiration times (when the cached copy is removed from edge locations) can be customized via headers. The default is 24 hours, but it can be set anywhere from 0 to unlimited.
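A sketch of how a header-driven expiration maps to an edge cache lifetime, assuming only the `Cache-Control: max-age` directive is used. CloudFront's real behaviour also depends on other headers (such as Expires) and distribution settings, none of which are modeled; `edge_ttl` is a hypothetical helper.

```python
import re
from typing import Optional

DEFAULT_TTL_SECONDS = 24 * 60 * 60  # 24-hour default noted above


def edge_ttl(cache_control: Optional[str]) -> int:
    """Seconds an edge location keeps an object, given a Cache-Control header.

    Simplified: only max-age is read; anything else falls back to 24 hours.
    """
    if cache_control:
        match = re.search(r"(?:^|[,\s])max-age=(\d+)", cache_control)
        if match:
            return int(match.group(1))
    return DEFAULT_TTL_SECONDS


print(edge_ttl("public, max-age=3600"))  # 3600
print(edge_ttl(None))                    # 86400
```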

CloudFront URLs in form of:

http://abc123.cloudfront.net/mydatacontent.jpg

DNS routes each user request to the CloudFront edge location with the lowest latency. There, CloudFront checks its cache; if the object is not found, it fetches it from the origin and returns the content immediately while caching it. After 24 hours (or the expiration set in the header), CloudFront checks the cached version against the origin to determine whether it is the latest, and updates the cache as necessary. Request parameters are part of the cache key; for example, the following are all cached separately, even though they may all return the same content:

http://abc.example.com/images/a.jpg?p=1

http://abc.example.com/images/a.jpg?p=2

http://abc.example.com/images/a.jpg?p=3
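A toy edge cache makes the point concrete: when the query string is part of the cache key, the three URLs above become three separate entries and three origin fetches. This assumes the distribution is configured to include query strings in the cache key; `EdgeCache` and the origin callback are hypothetical.

```python
from typing import Callable, Dict
from urllib.parse import urlsplit


class EdgeCache:
    """Toy edge cache: path plus query string forms the cache key."""

    def __init__(self, fetch_from_origin: Callable[[str], bytes]):
        self.fetch_from_origin = fetch_from_origin
        self.store: Dict[str, bytes] = {}
        self.origin_requests = 0

    def get(self, url: str) -> bytes:
        parts = urlsplit(url)
        key = parts.path + ("?" + parts.query if parts.query else "")
        if key not in self.store:  # cache miss: go to the origin
            self.origin_requests += 1
            self.store[key] = self.fetch_from_origin(url)
        return self.store[key]


cache = EdgeCache(lambda url: b"same image bytes")
for p in (1, 2, 3, 1):
    cache.get(f"http://abc.example.com/images/a.jpg?p={p}")
print(len(cache.store), cache.origin_requests)  # 3 3
```

The repeated `?p=1` request is a cache hit, but `?p=2` and `?p=3` each cost an origin fetch even though the bytes are identical.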

CloudFront doesn't retrieve an object until a user requests it. When an object is updated, it should be given a new name (e.g. *_v2); otherwise you must wait for the original object to expire (24 hours by default) before the update is retrieved. If an object is not requested often, CloudFront may evict it to make space for more popular objects.

Users can also send HTTP DELETE, OPTIONS, PATCH, POST and PUT requests through CloudFront, which forwards them to the origin. Delivering content through CloudFront can be cheaper than serving it directly from S3 (and faster, with fewer hops).

To route using CNAME or domain name (instead of the abc.cloudfront.net), setup in DNS. This can also be done in Route 53 if applicable.

Can control private content access by:

- Restrict access to objects in CloudFront edge caches by using signed URLs (pub/priv keys)

- Restrict access to S3 such that all requests must go through CloudFront. Then create special user called Origin Access Identity, which has access to read the bucket and no one else can. Remove all other access permissions in S3.

- For custom HTTP origins, this is not possible: the content has to be public for CloudFront to access it, so it is not as tightly controlled as with S3.

If serving content from your own domain over SSL (HTTPS), you must upload that SSL certificate to the AWS Identity and Access Management (IAM) certificate store. Then add the domain to the CloudFront distribution so CloudFront can serve the content under it. (Certificates must be in X.509 PEM format.)

Create S3 bucket: sfishs3bucket01

URL to content:

S3 = https://s3.amazonaws.com/sfishs3bucket01/images/20130609_091627.jpg (US Standard)

https://s3-us-west-1.amazonaws.com/sfishs3bucket02/images/20130609_091627.jpg (US West, Northern California)

CloudFront = https://d1gh6jsmdgiqiz.cloudfront.net/images/20130609_091627.jpg

CNAMEs can be used with CloudFront (an alias: a domain name that points to another domain name, not to an IP address. Example: public.example.com pointing to www.example.com)

Follow the CloudFront wizard to create a new CloudFront distribution for an S3 bucket. Wait for the distribution status to be "Deployed", then test it by accessing the content via the CloudFront URL.

S3 = https://s3.amazonaws.com/sfishs3bucket01/web/index.html

CloudFront = https://d1gh6jsmdgiqiz.cloudfront.net/web/index.html

Create Origin Access Identity: in the CloudFront dashboard (create/edit distribution), select "Yes" for "Restrict Bucket Access". This opens the "Origin Access Identity" options (create new / use existing). A single identity can be used for multiple distributions to various buckets. The limit is 100 identities in total, but you should really only need one for your application.

Using signed URLs: create a trusted signer (via User > Security Credentials). Go to CloudFront Key Pairs and create a new key pair. Download the private and public keys.
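A signed URL with a canned policy carries three query parameters: Expires, Signature and Key-Pair-Id. The signature is an RSA-SHA1 signature (made with the key pair's private key) over a compact policy JSON, encoded as base64 with `+`, `=` and `/` swapped for `-`, `_` and `~`. The sketch builds the policy and the URL; the RSA signing step itself needs a third-party library and is left to the caller, and the key pair ID and resource URL are placeholders.

```python
import base64
import json
from urllib.parse import urlencode


def canned_policy(resource_url: str, expires_epoch: int) -> str:
    """Canned-policy JSON that gets signed; no whitespace allowed."""
    policy = {"Statement": [{"Resource": resource_url,
                             "Condition": {"DateLessThan": {"AWS:EpochTime": expires_epoch}}}]}
    return json.dumps(policy, separators=(",", ":"))


def cloudfront_b64(data: bytes) -> str:
    """Base64, then swap characters that are invalid in a query string."""
    encoded = base64.b64encode(data).decode("ascii")
    return encoded.replace("+", "-").replace("=", "_").replace("/", "~")


def signed_url(resource_url: str, expires_epoch: int, signature: bytes, key_pair_id: str) -> str:
    """Attach the signed-URL query parameters.

    `signature` must be the RSA-SHA1 signature of canned_policy(...), produced
    with an RSA library (for example the third-party `cryptography` package).
    """
    params = urlencode({"Expires": expires_epoch,
                        "Signature": cloudfront_b64(signature),
                        "Key-Pair-Id": key_pair_id})
    return f"{resource_url}?{params}"
```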

Log Diagram

Some basic CloudFront troubleshooting (on top of logs) when user cannot view files on distribution:

- Make sure account is signed up for both CloudFront and S3

- Check object permissions on S3

- Check the CNAME and make sure it's pointing to the correct location

- Check URL

- Are you using a custom origin for content? If so, check there

ERROR: Certificate xxx is being used by CloudFront

When trying to delete an SSL cert from the IAM certificate store, you get this error.

- CloudFront distribution must be associated either with default certificate or a custom SSL one. Make sure to rotate distribution to another certificate OR revert from using the custom one back to the default one.

Managed relational database service providing the capabilities of MySQL, Oracle or Microsoft SQL Server as a managed, cloud-based service. It eliminates the administrative overhead of launching, managing and scaling your own relational database on EC2 or in another computing environment. Backups are done automatically every night, and DB snapshots can also be initiated by the user. The database is accessed directly (like any other database) via the database server's address. For example, an original connection string using dbserver.example.com is replaced with dbserver.c0caffpest.us-east1.rds.amazonaws.com. RDS can use IAM and VPC for security.

RDS configurations can range from a 64-bit instance with 1.7 GB RAM and a single EC2 Compute Unit (ECU) up to a 64-bit instance with 68 GB RAM and 26 ECUs. IOPS range from 1,000 to 30,000.

To increase I/O capacity do any of following:

- Migrate the DB instance to an instance class with higher I/O capacity

- Convert from standard storage to Provisioned IOPS storage

- If already Provisioned IOPS, provision additional throughput capacity

Use IAM to control DB administration, such as creating, modifying or deleting RDS resources, security groups, option groups or parameter groups; also remember to rotate credentials often.

RDS Multi-AZ

Synchronously replicates data from the primary RDS DB instance to a standby instance in another Availability Zone, and automatically fails over to the standby instance on failure (takes about 3 minutes).

RDS vs Database on EC2

Databases on EC2 are ideal for applications that require more control than RDS supports, i.e. maximum administrative control and configurability (such as control at the OS level). On EC2, however, backups/snapshots must be done manually or scheduled as a job; in RDS they are handled automatically. If using MySQL, Oracle, PostgreSQL or MS SQL, RDS is really the better way to go (patching, automated backups, Provisioned IOPS, replication, easier scaling).

RDS Components

- DB Instances (MySQL, PostgreSQL, Oracle and MS SQL) size ranges from 5GB to 3TB

- Regions and Availability Zones (AZ)

o Create in one region but can use multiple AZ for performance and redundancy / failover support.

- Security Groups (firewall filter)

o DB security group controls access to DB that is not in a VPC

o VPC security group controls access inside VPC

o EC2 security group controls access to EC2

- DB Parameter Groups

o Contains engine config values that can be applied to one or more DB instances

o This is specific to database engine and version

- DB Option Groups

o For various tools based on the DB engine (currently only available for Oracle, MySQL and MSSQL)

o The tools vary based on the DB being used (Oracle has most tools)

- Reserved Instances

o A one-time up-front payment reserves an instance for a one- or three-year term at much lower rates

o Available in 3 variants: Heavy, Medium and Light Utilization


Terminology and Concepts

CIDR = Classless Inter-Domain Routing, a method for allocating IP addresses and routing IP packets

DB Instance = the database server in the cloud (RDS does not provide direct host access; connect remotely); an account can have up to 40 RDS DB instances, of which up to 10 can be Oracle / MS SQL

DB Instance Class = memory capacity of the DB (t1.micro, m1.small, m1.medium, …)

RDS Storage = some factors that can affect performance are

- DB snapshot creation

- Nightly backups

- Multi-AZ creations

- Read replica

- Scaling Storage

Regions and AZ = any RDS instance launched belongs to that region only. A Multi-AZ deployment keeps a standby instance in another AZ; without Multi-AZ there is no standby instance.

Maintenance = controlled by the user, use multi-AZ to minimize disruptions

VPC = all RDS instances should be in a VPC (at least the default VPC, which is now mandatory), and the VPC should have subnets in at least two AZs in the region

Instance Replication = replication is done across AZs, and RDS automatically switches to the standby instance on failure. The standby instance is never in the same AZ as the running instance. (MySQL read replicas use MySQL's built-in replication feature.)

Events = tracks all activity in RDS; retrieved with the DescribeEvents action

SOAP = only available through HTTPS

MySQL

MyISAM does not support crash recovery

Federated Storage Engine is not supported

Oracle

Two types of licensing: BYOL (bring your own license; contact Oracle for support) or License Included (AWS owns the license and no additional license is needed; contact AWS for support)

The Database Diagnostic Pack and Database Tuning Pack are only available on Enterprise Edition

MSSQL

Storage cannot be increased after creation (due to Windows Server's lack of striped storage extensibility), so the initial allocation should anticipate future growth

Maximum of 30 databases per instance; maximum storage is 1024 GB for SQL Server editions other than Express, and 10 GB for Express Edition

PostgreSQL

Minor version upgrades are performed automatically by RDS during the user's maintenance window.

Making Changes to a DB Instance

When renaming an instance, the old DNS name is removed automatically; read replicas stay attached and keep their names; metrics follow the name, so they start over unless another instance reuses the old name

Created a MySQL DB instance using a DB Security Group that allows port 3306; to connect, run the following:

$ mysql -h labmysql.c9buqst6tnbq.us-west-2.rds.amazonaws.com -P 3306 -u dba -p -b -v

Enter password: **********

Welcome to the MySQL monitor.  Commands end with ; or \g.

Your MySQL connection id is 25

Server version: 5.6.13-log MySQL Community Server (GPL)

Copyright (c) 2000, 2011, Oracle and/or its affiliates. All rights reserved.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql> show databases;

--------------

show databases

--------------

+--------------------+

| Database           |

+--------------------+

| information_schema |

| DBLab1             |

| innodb             |

| mysql              |

| performance_schema |

+--------------------+

5 rows in set (0.05 sec)

mysql> use DBLab1

Database changed

Now can create and modify tables.

mysql> select * from LabTable1;

--------------

select * from LabTable1

--------------

+----+------------+

| id | data       |

+----+------------+

|  1 | L1 RECORD1 |

|  2 | L1 RECORD2 |

|  3 | L1 RECORD3 |

|  4 | L1 RECORD4 |

|  5 | L1 RECORD5 |

+----+------------+

5 rows in set (0.04 sec)

mysql> select * from LabTable2;

--------------

select * from LabTable2

--------------

+----+------------+

| id | data       |

+----+------------+

|  1 | L2 RECORD1 |

|  2 | L2 RECORD2 |

|  3 | L2 RECORD3 |

+----+------------+

3 rows in set (0.04 sec)

mysql> select * from LabTable3

    -> ;

--------------

select * from LabTable3

--------------

+----+------------+

| id | data       |

+----+------------+

|  1 | L3 RECORD1 |

|  2 | L3 RECORD2 |

+----+------------+

2 rows in set (0.04 sec)

Predictable and scalable NoSQL data store that stores structured data in tables, indexed by primary key, and allows low-latency read and write access to items ranging from 1 byte to 64 KB in size.

Some facts:

- No fixed schema, so each data item can have different attributes

- Primary key can be either a single-attribute hash key or a composite hash-and-range key

- Supports three data types: number, string and binary (scalar and multi-valued sets).

- Automatically replicated to 3 different availability zones in region.

- Quick performance with the use of SSD and limiting indexing on attributes.

Data Model

- Tables, Items and Attributes

o Database is collection of tables, where table is collection of items and each item is collection of attributes

o Tables are schema-less, just collection of various items

o Each attribute is a name-value pair, and the total item size must be less than 64 KB

o No NULL or Empty string attributes are allowed

- Operations

o Table: create, update, delete

o Item: add, update, delete

o Query and Scan

o Data Read
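The item rules above (name-value attributes, no NULL or empty strings, under 64 KB in total) can be checked client-side before a write. This is an approximation: it assumes the size is the UTF-8 length of attribute names plus values, and it estimates numbers by their decimal text length, which is not DynamoDB's exact formula. The helper names are hypothetical.

```python
MAX_ITEM_BYTES = 64 * 1024  # item limit described in these notes


def attribute_size(name: str, value) -> int:
    """Approximate bytes for one attribute: UTF-8 name plus value."""
    if value is None or value == "":
        raise ValueError(f"attribute {name!r}: NULL or empty strings are not allowed")
    if isinstance(value, bytes):
        value_size = len(value)                  # binary: raw length
    elif isinstance(value, str):
        value_size = len(value.encode("utf-8"))  # string: UTF-8 length
    elif isinstance(value, (int, float)):
        value_size = len(str(value))             # number: rough estimate
    else:
        raise TypeError(f"attribute {name!r}: unsupported type {type(value).__name__}")
    return len(name.encode("utf-8")) + value_size


def check_item(item: dict) -> int:
    """Return the approximate item size, raising if it exceeds the limit."""
    size = sum(attribute_size(n, v) for n, v in item.items())
    if size > MAX_ITEM_BYTES:
        raise ValueError(f"item is {size} bytes; limit is {MAX_ITEM_BYTES}")
    return size


print(check_item({"id": 42, "title": "Hello"}))  # 14
```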

Managed In-Memory Cache service using either Memcached or Redis (runs in VPC):

- Memcached: memory object caching system for code/applications

- Redis: in-memory key-value store that supports data structures such as sorted sets and lists. Often used as an in-memory NoSQL database

Data Model

- Cache Nodes = smallest block of memory deployment

- Cache Cluster = group of cache nodes

- Cache Parameter Groups = parameters to manage runtime settings (used during startup)

- Replication Groups = duplicates / replicas on more clusters to avoid data loss

- Security = controlled through the subnet group in VPC

By default, cache clusters are not redundant and standalone. If using Redis, can create replication group to enhance scalability and avoid data loss. (only for Redis, not Memcached)

Managed Petabyte-Scalable Data Warehouse service optimized for datasets ranging from gigabytes up through petabytes or more. Some common use cases are:

- Analyze global sales data

- Store historical stock trade data

- Analyze ad impressions

- Aggregate gaming data

- Analyze social trends

- Measure clinical quality, operation efficiency and financial performance in health care

Nodes can be a few GB up through PB, controlled through the AWS console or APIs. Costs less than $1000 per TB per year.

tbd

When an AWS account is first created, its root account has unlimited access to everything. After signing in, you should set up IAM. Permissions are defined in policies, and AWS provides policy templates that you can use. Signing up for EC2 automatically signs you up for S3 and VPC. Watch the video on the IAM help page (best practices).

https://573575957043.signin.aws.amazon.com/console

Created an account alias:

https://solidfish.signin.aws.amazon.com/console

There is an IAM policy simulator.

Groups are used to manage permissions. Create groups using permissions policy templates. Some example templates are:

- Administrator: full access to AWS services and resources

- Power user

- Read only

- CloudFormation Read Only Access

- CloudFront Full

- CloudFront Read only

- …

Best practice is to create a unique user for everyone: this keeps access granular and separated, for better control. Passwords can be created manually or auto-generated. A password is required only if the user will access the AWS Management Console (otherwise the user account is used with the API via access keys).

Access Keys

Used to make secure REST or Query protocol requests to any AWS service API
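The notes don't show how an access key secures a request. As a sketch of one mechanism: AWS Signature Version 4 signs each request with a key derived from the secret access key through a chain of HMAC-SHA256 steps (date, region, service, "aws4_request"). Only the key derivation and the final HMAC are shown; building the canonical request and string to sign is omitted. The secret key below is AWS's published example value, not a real credential.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, message: str) -> bytes:
    return hmac.new(key, message.encode("utf-8"), hashlib.sha256).digest()


def signing_key(secret_access_key: str, date_stamp: str, region: str, service: str) -> bytes:
    """Derive a Signature Version 4 signing key (date_stamp is YYYYMMDD)."""
    k_date = _hmac_sha256(("AWS4" + secret_access_key).encode("utf-8"), date_stamp)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


def sign(string_to_sign: str, key: bytes) -> str:
    """Hex signature that would go into the Authorization header."""
    return hmac.new(key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()


key = signing_key("wJalrXUtnFEMI/K7MDENG+bPxRfiCYEXAMPLEKEY", "20120215", "us-east-1", "iam")
print(key.hex())
```

Because the derived key is scoped to one date, region and service, the secret access key itself never travels with the request.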

MFA � Multi-Factor Authentication

Requires one more factor for user authentication (on top of the password). This can be a virtual device (set up by scanning a QR code with a smartphone app) or a hardware token. This is best for privileged users.
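Virtual MFA apps generate the six-digit code with the time-based one-time password algorithm (TOTP, RFC 6238): an HMAC-SHA1 of the current 30-second time step, truncated to 6 digits. A minimal sketch using only the standard library; the secret below is the RFC's test secret, whereas a real device gets its secret from the QR code.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, for_time: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238, HMAC-SHA1)."""
    now = time.time() if for_time is None else for_time
    counter = int(now // step)  # number of 30-second steps since the epoch
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = digest[-1] & 0x0F  # dynamic truncation (RFC 4226)
    code = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


# RFC test secret; at t=59 s the counter is 1, giving the RFC 4226 value 287082.
print(totp(b"12345678901234567890", for_time=59))  # 287082
```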

Signing Certificates

X.509 certificates are used for secure access with SOAP; a third-party tool such as OpenSSL can create the certificate, using an RSA key of 1024 or 2048 bits. Once the certificate is created (and self-signed), upload it to the user account in IAM.

IAM roles provide easy management of access keys on EC2 instances with automatic key rotation, let you assign least privilege to an application, and are fully integrated with the AWS SDKs. It is like delegating access. Some benefits are:

- No need to share security credentials

- Easy to break sharing relationship

- Great for cross-account access, intra-account delegation and federation

There are 3 role types:

Service Roles

- EC2

- CloudHSM

- Data Pipeline

- EC2 role for data pipeline

- Elastic Transcoder

- OpsWorks

Cross Account Access

- Access between AWS accounts you own: allows IAM users from another of your AWS accounts to access this account

- IAM user access from third-party AWS account holders: allows access from third-party AWS users

Identity Provider Access

- Access to web identity providers: Facebook, Google, etc.

- WebSSO (Web Single Sign-On): allows SAML provider access

- API access for SAML provider: allows a SAML provider to access AWS via the CLI or API

SAML 2.0 providers (3rd party) for IdP (Identity Provider)

For example, using your company's authentication system

SAML = Security Assertion Markup Language

Set the minimum password length and the following policy options:

- Require at least one uppercase letter

- Require at least one lowercase letter

- Require at least one number

- Require at least one non-alphanumeric character

- Allow users to change their own password
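To see how these rules combine, here is a small local checker that mirrors the character rules listed above. It is not the IAM API: the minimum length of 8 is an assumption for illustration, "non-alphanumeric" is approximated as anything other than an ASCII letter or digit, and the "allow users to change their own password" option is a permission rather than a content rule, so it is not modeled.

```python
import string
from dataclasses import dataclass
from typing import List


@dataclass
class PasswordPolicy:
    min_length: int = 8          # hypothetical value for this sketch
    require_uppercase: bool = True
    require_lowercase: bool = True
    require_number: bool = True
    require_symbol: bool = True  # "non-alphanumeric" option


def violations(password: str, policy: PasswordPolicy) -> List[str]:
    """Return human-readable rule violations; an empty list means the password passes."""
    problems = []
    if len(password) < policy.min_length:
        problems.append(f"shorter than {policy.min_length} characters")
    if policy.require_uppercase and not any(c in string.ascii_uppercase for c in password):
        problems.append("needs an uppercase letter")
    if policy.require_lowercase and not any(c in string.ascii_lowercase for c in password):
        problems.append("needs a lowercase letter")
    if policy.require_number and not any(c in string.digits for c in password):
        problems.append("needs a number")
    if policy.require_symbol and all(c in string.ascii_letters + string.digits for c in password):
        problems.append("needs a non-alphanumeric character")
    return problems


print(violations("password", PasswordPolicy()))
print(violations("Passw0rd!", PasswordPolicy()))  # []
```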

User Activity and Change Tracking

Collects and reports metrics on your AWS resources. You can set alarms on these metrics to trigger actions.

There are two parts to CloudWatch:

- Alarms

- Metrics (on any AWS resource)

o DynamoDB

o EBS

o EC2

o ELB

▪ HealthyHostCount

o ElastiCache

o ElasticMapReduce

o RDS

o SNS

o SQS

o StorageGateway
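An alarm watches one metric and changes state when that metric breaches a threshold. The sketch below evaluates an alarm locally against a list of datapoints, assuming the rule that it fires only when each of the most recent N evaluation periods breaches the threshold. The datapoints, threshold and period count in the example are made up, and real CloudWatch alarms have more options (statistics, missing-data handling) than this.

```python
import operator
from typing import Sequence

# CloudWatch-style comparison operator names mapped to Python comparisons
COMPARISONS = {
    "GreaterThanThreshold": operator.gt,
    "GreaterThanOrEqualToThreshold": operator.ge,
    "LessThanThreshold": operator.lt,
    "LessThanOrEqualToThreshold": operator.le,
}


def alarm_state(datapoints: Sequence[float], threshold: float,
                comparison: str, evaluation_periods: int) -> str:
    """Return "ALARM", "OK" or "INSUFFICIENT_DATA" based on the latest periods."""
    if len(datapoints) < evaluation_periods:
        return "INSUFFICIENT_DATA"
    breaches = COMPARISONS[comparison]
    recent = datapoints[-evaluation_periods:]
    # Every one of the last N periods must breach the threshold to trigger the alarm
    if all(breaches(value, threshold) for value in recent):
        return "ALARM"
    return "OK"


# Hypothetical ELB HealthyHostCount, one value per period
print(alarm_state([2, 2, 1, 1, 1], 2, "LessThanThreshold", 3))  # ALARM
```

Requiring several consecutive breaches keeps a single noisy datapoint from triggering the alarm's action.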

Automatically creates, deploys and manages the IT infrastructure needed to run a custom application.

Upload an existing application and it is deployed automatically into AWS, including load balancing and the rest of the required infrastructure

Supports Java, Node.js, PHP, Python, Ruby, .NET

Stacks supported are:

- Tomcat Java

- Apache PHP

- Apache Python

- Apache Node.js

- Passenger Ruby

- IIS 7.5 .NET

Supports multiple versions of the same application (e.g. PROD, DEV, TEST); supports up to 25 applications and 500 application versions

Supports application deployment via Git

Automatically deploys IT infrastructure on AWS using templates (stacks), built from a Template, Parameters, Mappings, Conditions, Pseudo Parameters, Resources, Resource Properties, References, Intrinsic Functions and Outputs.

Look at the template example here: https://s3.amazonaws.com/cloudformation-templates-us-east-1/WordPress_Single_Instance_With_RDS.template

Some notes:

- When creating a stack in the AWS Console, you are prompted for the stack parameter values during the wizard steps

- When creating, status goes from CREATE_IN_PROGRESS to CREATE_COMPLETE

- Creating a stack deploys all of its subcomponents, i.e. the AWS resources such as EC2 and RDS

- Deleting a stack removes all of its subcomponents

- To update an existing stack, run aws cloudformation update-stack from the AWS CLI (UpdateStack API), or use the Console (by uploading a new template file)

- There are some Windows AMI CloudFormation templates available on page 206

- For all stacks, the following views are available in the AWS Console:

o Overview

o Outputs (from template)

o Resources (AWS resources used)

o Events (logging)

o Template (the template)

o Parameters

o Tags

o Policy

The 6 top-level template objects (the template declares, in JSON format, the AWS resources that make up the stack):

- Format Version

- Description

- Parameters

- Mappings

- Resources (required: at least one resource must be defined; all other objects are optional)

- Outputs
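A minimal template using these top-level objects might look like the Python dictionary below, serialized to JSON. The parameter, mapping and resource names are hypothetical, and the AMI ID is a placeholder rather than a real image. The check enforces only the rule stated above that Resources is required, plus the fact that 2010-09-09 is the valid format version.

```python
import json
from typing import List

template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Description": "Single EC2 web server (illustrative sketch)",
    "Parameters": {
        "InstanceType": {"Type": "String", "Default": "t2.micro"},
    },
    "Mappings": {
        # Hypothetical AMI ID; look up a real one for your region
        "RegionMap": {"us-west-2": {"AMI": "ami-00000000"}},
    },
    "Resources": {
        "WebServer": {
            "Type": "AWS::EC2::Instance",
            "Properties": {
                "InstanceType": {"Ref": "InstanceType"},
                "ImageId": {"Fn::FindInMap": ["RegionMap", {"Ref": "AWS::Region"}, "AMI"]},
            },
        },
    },
    "Outputs": {
        "WebsiteURL": {
            "Value": {"Fn::Join": ["", ["http://", {"Fn::GetAtt": ["WebServer", "PublicDnsName"]}]]},
        },
    },
}


def template_errors(tpl: dict) -> List[str]:
    """Return basic structural problems; an empty list means the checks passed."""
    errors = []
    version = tpl.get("AWSTemplateFormatVersion")
    if version is not None and version != "2010-09-09":
        errors.append("AWSTemplateFormatVersion must be 2010-09-09")
    if not tpl.get("Resources"):
        errors.append("Resources is required and must define at least one resource")
    return errors


print(template_errors(template))  # []
print(json.dumps(template, indent=2))
```

The Outputs entry plays the same role as the website URL that the WordPress stack reports after creation.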

Create a WordPress stack based on given S3 template

The resulting website URL was given in the Outputs section:

http://ec2-54-201-76-172.us-west-2.compute.amazonaws.com/wordpress

WordPress set up with: solidfish / sfish

Manages an application on AWS, including lifecycle, provisioning, configuration, deployment, updates, monitoring and access control.


Managed Hadoop Framework

For data mining: researchers, data analysts and others running Hadoop on top of EC2 and S3
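Hadoop processes data with the MapReduce model. The single-process sketch below walks through the three phases (map, shuffle/sort, reduce) on the classic word-count example. It only illustrates the model and does not call EMR or Hadoop, which would run these phases in parallel across EC2 nodes with input read from S3.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Mapper: emit (word, 1) for every word in an input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle/sort: group all emitted values by key, sorted by key."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(sorted(grouped.items()))


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reducer: combine all values for one key."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    pairs = (pair for line in lines for pair in map_phase(line))
    return dict(reduce_phase(k, v) for k, v in shuffle_phase(pairs).items())


print(word_count(["the quick fox", "the lazy dog"]))
# {'dog': 1, 'fox': 1, 'lazy': 1, 'quick': 1, 'the': 2}
```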


Orchestration for Data-Driven Workflows


Managed Search Service

Workflow Service for Coordinating Application Components

Coordinates work across distributed application components; works similarly to SQS

http://aws.amazon.com/swf/faqs/

Differences between SWF and SQS

- SWF is task-oriented, not message-oriented

- Tasks are never duplicated

- Ease of use

- More details regarding the tasks


Message queue service that acts as a buffer between producers and consumers. SQS messages (text-based, up to 64KB) can be sent and received by servers or application components running within EC2 or anywhere on the internet. You can have an unlimited number of queues, and SQS supports unordered, at-least-once delivery of messages.

An example is using SQS for image encoding (where the image is stored in S3):

- Asynchronously pull task message from queue

- Retrieve filename from message

- Process conversion

- Write image back to S3

- Create a "task complete" message on another queue

- Delete the original task message from the original queue

- Check for more messages (loop)
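The worker loop above can be sketched with a tiny in-memory stand-in for SQS. InMemoryQueue with its send/receive/delete methods, the dict standing in for S3, and convert_image are all hypothetical; they only mirror the SQS pattern of receiving a message, processing it, and then explicitly deleting it. Visibility timeouts (undeleted messages reappearing) are not modeled, and a real worker would use an AWS SDK instead.

```python
import itertools
from collections import deque
from typing import Deque, Dict, List, Tuple


class InMemoryQueue:
    """Hypothetical SQS stand-in: received messages stay in flight until deleted."""

    def __init__(self) -> None:
        self._visible: Deque[Tuple[str, str]] = deque()
        self._in_flight: Dict[str, str] = {}
        self._ids = itertools.count()

    def send(self, body: str) -> None:
        self._visible.append((f"m{next(self._ids)}", body))

    def receive(self, max_messages: int = 10) -> List[Tuple[str, str]]:
        """Return up to max_messages (receipt_handle, body) pairs and hide them."""
        batch = []
        while self._visible and len(batch) < max_messages:
            handle, body = self._visible.popleft()
            self._in_flight[handle] = body
            batch.append((handle, body))
        return batch

    def delete(self, receipt_handle: str) -> None:
        self._in_flight.pop(receipt_handle, None)

    def __len__(self) -> int:
        return len(self._visible) + len(self._in_flight)


def convert_image(data: bytes) -> bytes:
    """Hypothetical placeholder for the actual encoding step."""
    return data.upper()


def worker(tasks: InMemoryQueue, done: InMemoryQueue, s3: Dict[str, bytes]) -> int:
    processed = 0
    while True:
        batch = tasks.receive()                      # pull task messages
        if not batch:
            return processed                         # a real worker would keep polling
        for handle, filename in batch:               # message body is the S3 key
            s3[f"converted/{filename}"] = convert_image(s3[filename])  # write back to "S3"
            done.send(f"task complete: {filename}")  # notify on another queue
            tasks.delete(handle)                     # delete only after success
            processed += 1


s3 = {"cat.jpg": b"cat", "dog.jpg": b"dog"}
tasks, done = InMemoryQueue(), InMemoryQueue()
for name in list(s3):
    tasks.send(name)
print(worker(tasks, done, s3), len(tasks), len(done))  # 2 0 2
```

Deleting only after the output is written is what makes at-least-once delivery safe: if the worker dies mid-task, the message is not lost.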

A client can send or receive SQS messages at a rate of 5 to 50 messages per second. For higher performance, a requester can send or receive multiple messages (up to 10) in a single call. SQS messages also have a configurable expiration (retention period), anywhere from 1 hour to 14 days. Messages are retained until they are explicitly deleted or automatically deleted upon expiration.
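Batching and retention come down to simple arithmetic. The helpers below split outgoing messages into batches of at most 10 (the per-call limit stated above) and check a retention period against the 1 hour to 14 days range given in these notes. They are local sketches, not SDK calls, and the limits are the ones recorded here, which may have changed since.

```python
from typing import List, Sequence, TypeVar

T = TypeVar("T")

MAX_BATCH = 10                       # messages per call, per these notes
MIN_RETENTION_S = 60 * 60            # 1 hour
MAX_RETENTION_S = 14 * 24 * 60 * 60  # 14 days = 1,209,600 seconds


def batches(messages: Sequence[T], size: int = MAX_BATCH) -> List[List[T]]:
    """Split messages into consecutive batches of at most `size` items."""
    return [list(messages[i:i + size]) for i in range(0, len(messages), size)]


def valid_retention(seconds: int) -> bool:
    """True if the retention period falls inside the documented range."""
    return MIN_RETENTION_S <= seconds <= MAX_RETENTION_S


msgs = [f"msg-{n}" for n in range(25)]
print([len(b) for b in batches(msgs)])  # [10, 10, 5]: 3 calls instead of 25
```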

Email Sending Service

Monitors and manages emails

Metrics that Define Success:

- Bounce rate (whether emails are delivered successfully)

- Complaint rate (recipients marking email as spam)

- Content issues (content filters)
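Bounce and complaint rates are ratios over the number of emails sent. The sketch below computes them and flags any that exceed caller-supplied limits; the sample counts and the limits in the example are hypothetical, so check the current SES documentation for the thresholds AWS actually applies.

```python
from typing import Dict, List


def sending_metrics(sent: int, bounces: int, complaints: int) -> Dict[str, float]:
    """Return bounce and complaint rates as fractions of emails sent."""
    if sent <= 0:
        raise ValueError("sent must be positive")
    return {"bounce_rate": bounces / sent, "complaint_rate": complaints / sent}


def over_limits(metrics: Dict[str, float], limits: Dict[str, float]) -> List[str]:
    """List the metrics that exceed the caller-supplied limits."""
    return [name for name, value in metrics.items() if value > limits.get(name, 1.0)]


m = sending_metrics(sent=10_000, bounces=300, complaints=5)
print(m)  # {'bounce_rate': 0.03, 'complaint_rate': 0.0005}
print(over_limits(m, {"bounce_rate": 0.02, "complaint_rate": 0.001}))  # ['bounce_rate']
```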

Best Practices

- Maintain a good domain and "From" address reputation

Push Notification Service

There are two types of users:

- Publisher (producer)

- Subscriber (consumer)

Common SNS Scenarios:

- Fanout: can be used with SQS to deliver a message to multiple queues simultaneously for parallel processing

- Application / System Alerts

Email alerts

- Push Email / Text Messaging

Messages sent via SMS for updates, headlines, etc.; could have links for users to respond

- Push Mobile

Messages sent to mobile apps for updates, alerts, etc; could have links for user response
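The fanout scenario can be sketched with in-memory objects: a topic copies each published message to every subscribed queue, so independent consumers each process their own copy in parallel. Topic, the topic name and the plain deques used as queues are hypothetical stand-ins, not the SNS or SQS APIs.

```python
from collections import deque
from typing import Deque, List


class Topic:
    """Hypothetical stand-in for an SNS topic with SQS-queue subscribers."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.subscribers: List[Deque[str]] = []

    def subscribe(self, queue: Deque[str]) -> None:
        self.subscribers.append(queue)

    def publish(self, message: str) -> int:
        """Deliver a copy of the message to every subscriber; return the delivery count."""
        for queue in self.subscribers:
            queue.append(message)
        return len(self.subscribers)


orders = Topic("new-orders")
billing, shipping, analytics = deque(), deque(), deque()
for q in (billing, shipping, analytics):
    orders.subscribe(q)

orders.publish("order-42")
# Each consumer now works on its own copy independently
print(billing.popleft(), shipping.popleft(), analytics.popleft())
```

The publisher never needs to know how many consumers exist; adding another processing step is just another subscription.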

Easy-to-use Scalable Media Transcoding


GovCloud is an isolated region designed for US government agencies with sensitive data; it adheres to the US International Traffic in Arms Regulations (ITAR) and can hold all categories of Controlled Unclassified Information (CUI). Physical and logical administration is done by U.S. persons only. Authentication is also separate from the rest of AWS, with separate accounts and a vetting process (to verify that users are U.S. persons). Multi-Factor Authentication (MFA) is recommended for GovCloud users.

The following are available in GovCloud:

Application Services

- Simple Notification Service (SNS)

- Simple Queue Service (SQS)

- Simple Workflow Service (SWF)

Compute

- Elastic Compute Cloud (EC2)

o Provisioned IOPS EBS volumes not supported

o EBS-optimized instances not supported

- Elastic MapReduce (EMR)

- Auto Scaling

- Elastic Load Balancing

Database

- DynamoDB

- Relational Database Service (RDS)

Deployment and Management

- Identity and Access Management (IAM)

o Users in GovCloud are specific to this region only and do not exist in the normal AWS regions

- CloudWatch

- CloudFormation

- Management Console for GovCloud

Networking

- Virtual Private Cloud (VPC)

- Direct Connect

Storage

- Elastic Block Store (EBS)

- Simple Storage Service (S3)

o Cannot directly copy contents from GovCloud to another AWS region

Support

- Customer support

GovCloud Best Practices

Use Direct Connect into GovCloud, which comes in two flavors: with VPN or without VPN. ITAR data requires a VPN connection (so that all traffic is encrypted).

CloudFront can be used with GovCloud. This is a normal CloudFront setup: a CloudFront origin can be a non-AWS resource, and since GovCloud is outside normal AWS, CloudFront can access it in the same way.

Amazon Resource Names (ARNs)

In GovCloud, the ARNs are slightly different:

arn:aws vs arn:aws-us-gov (the partition changes; the GovCloud region itself, us-gov-west-1, goes in the separate region field)

arn:aws-us-gov:dynamodb:us-gov-west-1:1234566:table/books_table
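An ARN has the general form arn:partition:service:region:account-id:resource, so in GovCloud only the partition (and the region value) differ. The parser below is a minimal sketch that splits on the first five colons; it does not validate service-specific resource formats, and the us-west-2 counterpart in the example is hypothetical.

```python
from typing import NamedTuple


class Arn(NamedTuple):
    partition: str
    service: str
    region: str
    account_id: str
    resource: str

    def __str__(self) -> str:
        return ":".join(("arn",) + tuple(self))


def parse_arn(text: str) -> Arn:
    parts = text.split(":", 5)  # the resource part may itself contain colons
    if len(parts) != 6 or parts[0] != "arn":
        raise ValueError(f"not an ARN: {text!r}")
    return Arn(*parts[1:])


gov = parse_arn("arn:aws-us-gov:dynamodb:us-gov-west-1:1234566:table/books_table")
print(gov.partition, gov.region)  # aws-us-gov us-gov-west-1

# Same table in a standard region: only partition and region differ
std = gov._replace(partition="aws", region="us-west-2")
print(std)  # arn:aws:dynamodb:us-west-2:1234566:table/books_table
```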