These are some general notes regarding High Performance Computing (HPC).

Purpose

HPC focuses on problems that a single system would struggle to complete in a timely manner and that therefore need more computing power. HPC is not only about improving system or hardware performance; it also covers optimizations at the software level.

HPC doesn't always require multiple systems or processors. Depending on the problem, the solution can use one or more of the following processor setups.

- Single Core SIMD

- Multi-Core / Multithreading

  - Declarative approach/Implicit (OpenMP)

  - Imperative approach/Explicit (std::thread, pthread)

  - Library approach (Intel TBB/Microsoft PPL)

- Clusters – many systems

  - Use of Message Passing Interface (MPI)

- Processor Accelerators

  - GPU (OpenCL, CUDA)

  - Hardware Accelerators (ASIC/FPGA)

Parallelism and Concurrency

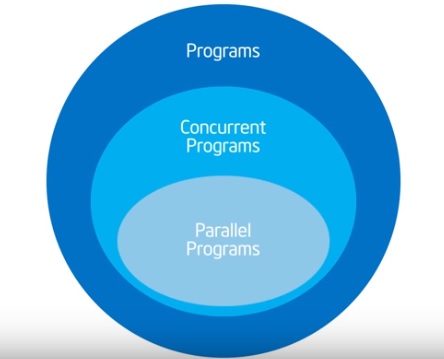

Often when speaking of HPC we talk about parallelism. How does this differ from concurrency?

- Concurrency is a condition where multiple tasks are logically active at one time

- Parallelism is a condition where multiple tasks are actually active at one time

Having concurrency does not mean we have parallelism: each task can be on its own thread while the threads take turns running on a single core. However, having parallelism does mean we have concurrency: each task is on its own thread and the threads run at the same time.

Expose the concurrency in a problem and then make sure the concurrent tasks actually run in parallel. Note that not all problems can run in parallel; some only allow concurrency and some are purely sequential.

Multimedia type processing

Multimedia processing has requirements similar to those of high performance computing because of its fine-grained, data-level parallelism. In other words, the rendering of images is done using the same operation but with different input values that generate different outputs. Since the operation itself is the same, it can be done in parallel to process multiple inputs and generate multiple outputs. In graphics processing a ‘divide and conquer’ approach is taken to process many sections of the image in parallel.

Single Instruction Multiple Data – SIMD

SIMD focuses on the computation instructions themselves and on optimizing how they are processed. This focus lets us increase performance on a single core without adding more hardware. It performs the same instruction on multiple data inputs in parallel to generate multiple outputs.

Note that there are different types of instructions and each can have a different cost. Cost is the number of clock cycles the computation takes to complete. Costs vary with the CPU/hardware and with the data type (float vs double, for example). On a given CPU the relative costs could look like this:

add < multiply < divide < square root

In many cases the compiler is able to optimize simple instructions such as those above. However, more complex computations may require the programmer to write the code carefully.

In languages like C++ we can program at the instruction level using inline assembly. Here we’re able to use specific registers and issue assembly instructions such as ‘mov’ or arithmetic instructions like ‘mulps’ (multiply packed single-precision floats). More examples here:

https://en.wikibooks.org/wiki/X86_Assembly/SSE

There are wrappers for assembly instructions called intrinsics. These are compiler-provided functions that can be used instead of writing out the lower-level inline assembly. Intrinsics give access to SIMD's vectorized operations: a single instruction is applied to a vector of inputs at once and generates a vector of outputs.
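As a rough illustration of intrinsics, the sketch below multiplies two float arrays four elements at a time. It assumes an x86 CPU with SSE and falls back to a plain loop elsewhere. The function name `multiply` and the `HAVE_SSE` macro are made up for this example; the `_mm_*` calls are the standard SSE intrinsics from `<xmmintrin.h>`.

```cpp
#include <cstddef>
#include <vector>

// Assumption: SSE is detected through these common compiler macros.
#if defined(__SSE__) || defined(_M_X64) || (defined(_M_IX86_FP) && _M_IX86_FP >= 1)
#include <xmmintrin.h>  // SSE intrinsics: __m128, _mm_loadu_ps, _mm_mul_ps, _mm_storeu_ps
#define HAVE_SSE 1
#else
#define HAVE_SSE 0
#endif

// Hypothetical helper: element-wise product of two equally sized arrays.
std::vector<float> multiply(const std::vector<float>& a, const std::vector<float>& b)
{
    std::vector<float> result(a.size());
    std::size_t i = 0;
#if HAVE_SSE
    // Process four floats per iteration using 128-bit SSE registers.
    for (; i + 4 <= a.size(); i += 4)
    {
        __m128 va = _mm_loadu_ps(&a[i]);  // unaligned load of 4 floats
        __m128 vb = _mm_loadu_ps(&b[i]);
        __m128 vr = _mm_mul_ps(va, vb);   // maps to one 'mulps' over all 4 lanes
        _mm_storeu_ps(&result[i], vr);    // write 4 results back
    }
#endif
    // Scalar loop handles the leftover elements (or everything without SSE).
    for (; i < a.size(); ++i)
    {
        result[i] = a[i] * b[i];
    }
    return result;
}
```

Calling `multiply` on two five-element arrays would process the first four elements in one vector step and the last one in the scalar loop.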

Open Multi-Processing (OpenMP)

OpenMP is an API for decorating code for declarative parallelism. The decorations mark which instructions can be done in parallel. It depends on compiler support and requires the appropriate OpenMP runtime libraries. It works on many OSs / architectures and is available for C, C++ and Fortran.

Imperative Parallelism

- Separate data set into several independent parts

- Create a thread for each part

- Run computation on each thread

- Use of libraries (based on hardware vendor)
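The first three steps above can be sketched with `std::thread`. This is only a minimal illustration: the function name `parallel_sum` and the choice of a sum as the computation are assumptions made for the example, not something the notes prescribe.

```cpp
#include <algorithm>
#include <cstddef>
#include <numeric>
#include <thread>
#include <vector>

// Hypothetical helper: sums `data` using up to `num_threads` worker threads.
long long parallel_sum(const std::vector<int>& data, std::size_t num_threads)
{
    num_threads = std::max<std::size_t>(1, num_threads);
    std::vector<long long> partial(num_threads, 0);  // one result slot per thread
    std::vector<std::thread> workers;
    const std::size_t chunk = (data.size() + num_threads - 1) / num_threads;

    for (std::size_t t = 0; t < num_threads; ++t)
    {
        // Step 1: carve out an independent [begin, end) slice of the data.
        const std::size_t begin = std::min(data.size(), t * chunk);
        const std::size_t end = std::min(data.size(), begin + chunk);

        // Steps 2 and 3: create a thread and run the computation on its slice.
        workers.emplace_back([&data, &partial, t, begin, end] {
            partial[t] = std::accumulate(data.begin() + begin, data.begin() + end, 0LL);
        });
    }

    for (std::thread& w : workers)
    {
        w.join();  // wait for every worker before combining the results
    }
    return std::accumulate(partial.begin(), partial.end(), 0LL);
}
```

Each thread writes only to its own slot in `partial`, so no locking is needed until the results are combined after `join`. On some toolchains this needs the `-pthread` compiler flag.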

Declarative Parallelism

- Code is written as if sequential but carries decorations that the compiler uses to determine where multithreading should occur.

- Relies on compiler

OpenMP uses multithreading for its parallel processing. It has a master thread that spins off multiple task threads. This master thread handles things like work sharing, synchronization and data sharing.

Work Sharing

We can use ‘sections’, blocks of code / instructions where each can run in its own thread, or share the iterations of a loop between threads. For example, a ‘for’ loop can be defined to run in parallel. In the example below the iterations (each one an addition) are divided among the threads so the whole array is processed in parallel.

    #pragma omp parallel for
    for (size_t i = 0; i < length; i++)
    {
        result[i] = a[i] + b[i];
    }
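The loop above shows one form of work sharing. The ‘sections’ form mentioned earlier might look like the sketch below, where two unrelated computations can each run on their own thread. The `Stats` struct and `compute_stats` function are hypothetical names for this example. It assumes the compiler is invoked with OpenMP enabled (for example `-fopenmp` on GCC/Clang); without it the pragmas are ignored and the code runs sequentially with the same result.

```cpp
#include <algorithm>
#include <numeric>
#include <vector>

// Hypothetical result type for this example.
struct Stats
{
    long long sum;
    int largest;
};

// Hypothetical helper: computes two independent statistics over the same data.
Stats compute_stats(const std::vector<int>& values)
{
    long long sum = 0;
    int largest = 0;

    #pragma omp parallel sections
    {
        #pragma omp section
        {
            // Section 1: only this section writes to 'sum'.
            sum = std::accumulate(values.begin(), values.end(), 0LL);
        }
        #pragma omp section
        {
            // Section 2: only this section writes to 'largest'.
            largest = values.empty() ? 0 : *std::max_element(values.begin(), values.end());
        }
    }
    return {sum, largest};
}
```

Because each section writes to a different variable and only reads the input, no synchronization is needed between them.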

Synchronization

With multiple instructions being processed in parallel we will need some form of synchronization. OpenMP has the following decorations for performing synchronization.

- critical = block executed by one thread at a time

- atomic = the memory update that follows is performed atomically (other threads never see it half done)

- ordered = block executed in the same order as if the loop were sequential

- barrier = all threads wait until every thread reaches this point in the code

- nowait = threads can proceed past the end of a work-sharing construct without waiting for the other threads
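A small sketch of `atomic` and `critical` in use follows. The `EvenResult` struct and `collect_even` function are hypothetical names for this example, and it again assumes OpenMP is enabled at compile time (the code still runs sequentially if it is not).

```cpp
#include <algorithm>
#include <cstddef>
#include <vector>

// Hypothetical result type for this example.
struct EvenResult
{
    int count;
    std::vector<int> values;
};

// Hypothetical helper: counts and collects the even numbers in 'input'.
EvenResult collect_even(const std::vector<int>& input)
{
    int count = 0;
    std::vector<int> evens;
    const long long n = static_cast<long long>(input.size());

    #pragma omp parallel for
    for (long long i = 0; i < n; ++i)
    {
        const int value = input[static_cast<std::size_t>(i)];
        if (value % 2 == 0)
        {
            // atomic: a single memory update, cheaper than a full critical block.
            #pragma omp atomic
            count += 1;

            // critical: push_back touches several fields, so only one thread
            // at a time may execute this block.
            #pragma omp critical
            {
                evens.push_back(value);
            }
        }
    }

    // Threads may append in any order, so sort for a deterministic result.
    std::sort(evens.begin(), evens.end());
    return {count, evens};
}
```

In practice a counter like this is often better expressed with OpenMP's `reduction` clause; `atomic` is used here only to illustrate the decoration.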

Data Sharing

Another concern in parallel processing is the sharing of data. This is done through variables, and OpenMP has decorators (clauses) for controlling how these variables are shared. Some keywords for data sharing are:

- shared = all items accessible by all threads simultaneously (by default all variables are shared except for the loop counter)

- private = item is thread-local, not available to other threads, and starts out uninitialized

- firstprivate = like private, but each thread’s copy is initialized with the original value from its parent’s scope

- lastprivate = like private, but the parent’s variable is updated with the value from the sequentially last iteration (or section) when the construct completes

- default = sets the sharing for variables not listed explicitly, e.g. default(shared) or default(none) (without it, variables are shared)
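The clauses above might be combined as in the sketch below. The `ScaleResult` struct and `scale` function are hypothetical names for this example, and OpenMP is assumed to be enabled at compile time (without it the pragma is ignored and the result is the same).

```cpp
#include <cstddef>
#include <vector>

// Hypothetical result type for this example.
struct ScaleResult
{
    std::vector<int> scaled;
    int last_value;
};

// Hypothetical helper: multiplies every element of 'input' by 'factor'.
ScaleResult scale(const std::vector<int>& input, int factor)
{
    if (input.empty())
    {
        return {{}, 0};  // nothing to do; avoids a zero-iteration lastprivate
    }

    std::vector<int> scaled(input.size());  // shared: each thread writes its own elements
    int tmp = 0;                            // private: per-thread scratch variable
    int last_value = 0;                     // lastprivate: receives the final iteration's value
    int n = static_cast<int>(input.size());

    // 'input' and 'n' are not listed, so they are shared by default.
    #pragma omp parallel for shared(scaled) firstprivate(factor) \
                             private(tmp) lastprivate(last_value)
    for (int i = 0; i < n; ++i)
    {
        const std::size_t idx = static_cast<std::size_t>(i);
        tmp = input[idx] * factor;  // factor: per-thread copy set from the original
        scaled[idx] = tmp;
        last_value = tmp;           // copied out from iteration n - 1 after the loop
    }
    return {scaled, last_value};
}
```

Note that `tmp` is assigned before it is read in every iteration, since a private copy starts out uninitialized.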

Message Passing Interface (MPI)

When multiple systems have to work in parallel we need some form of inter-process communication (IPC). The MPI standard helps with this. It allows communication between several machines, each having one or more CPUs/GPUs, working over a network.

Parallelism can be done at the following levels, where a computation can be done at one or all of these levels at the same time.

- Instruction

- Thread

- Process

Some libraries for implementing MPI are:

- Open MPI

- MPICH

- Intel MPI Library

MPI supports two main communication types:

- Point-to-point Communication = Process A sends a message to process B

  - Standard mode = returns as soon as the message has been sent or buffered, so the send buffer can be reused

  - Synchronous = returns only once the matching receive has started

  - Buffered = returns immediately; the message is copied into a user-supplied buffer

  - Ready = may only be started once the matching receive is already set up, finishes immediately

  - Examples

    - MPI_{I}[R,S,B]send = send in standard mode or, with a letter, in Ready, Synchronous or Buffered mode; the optional I makes the call non-blocking (e.g. MPI_Send, MPI_Isend, MPI_Ssend)

    - MPI_{I}recv = receive, blocking or non-blocking

- Collective Communication = all processes in a group take part in the exchange

  - MPI_Bcast = Broadcast, a single process sends the same data to all other processes. Every process makes this same call, the root to send and the others to receive.

  - MPI_Barrier = Barrier Synchronization, ensures all processes reach this point

  - MPI_{All}gather{v} = gathers data from a group of processes

  - MPI_Scatter{v} = distributes pieces of data from one process to every other process

  - MPI_{All}reduce / MPI_Reduce_scatter = performs a reduction operation

Accelerated Massive Parallelism (AMP)

GPU computing is very popular for parallel workloads. It uses the SIMT (single instruction, multiple threads) programming model. The two main players for GPU computing are NVIDIA and AMD. The following frameworks are available for these GPU computing platforms:

- CUDA – NVIDIA only, created by NVIDIA

- OpenCL – Supports AMD, NVIDIA and Xeon Phi

- C++ AMP

  - Compiler + library for heterogeneous computing leveraging a GPU (NVIDIA/AMD)

  - Uses mostly C++

Tiling

There are several different types of GPU memory, such as Global Memory, Thread-local Memory, and Shared (tile-static) Memory.

References

High Performance Computing using C++

https://app.pluralsight.com/player?course=cpp-high-performance-computing